Humans have created detailed scientific maps of the brain since Sir Christopher Wren illustrated Thomas Willis’s Cerebri anatome (1664), one of the first rigorous dissection-based anatomies of the brain. But only recently have such images begun to play a larger role in how ordinary people make sense of everyday life. Since roughly the ‘Decade of the Brain’ announced by the then US president George H W Bush in 1990, high-resolution brain images have been widely trafficked, from popular magazines to the courtroom.

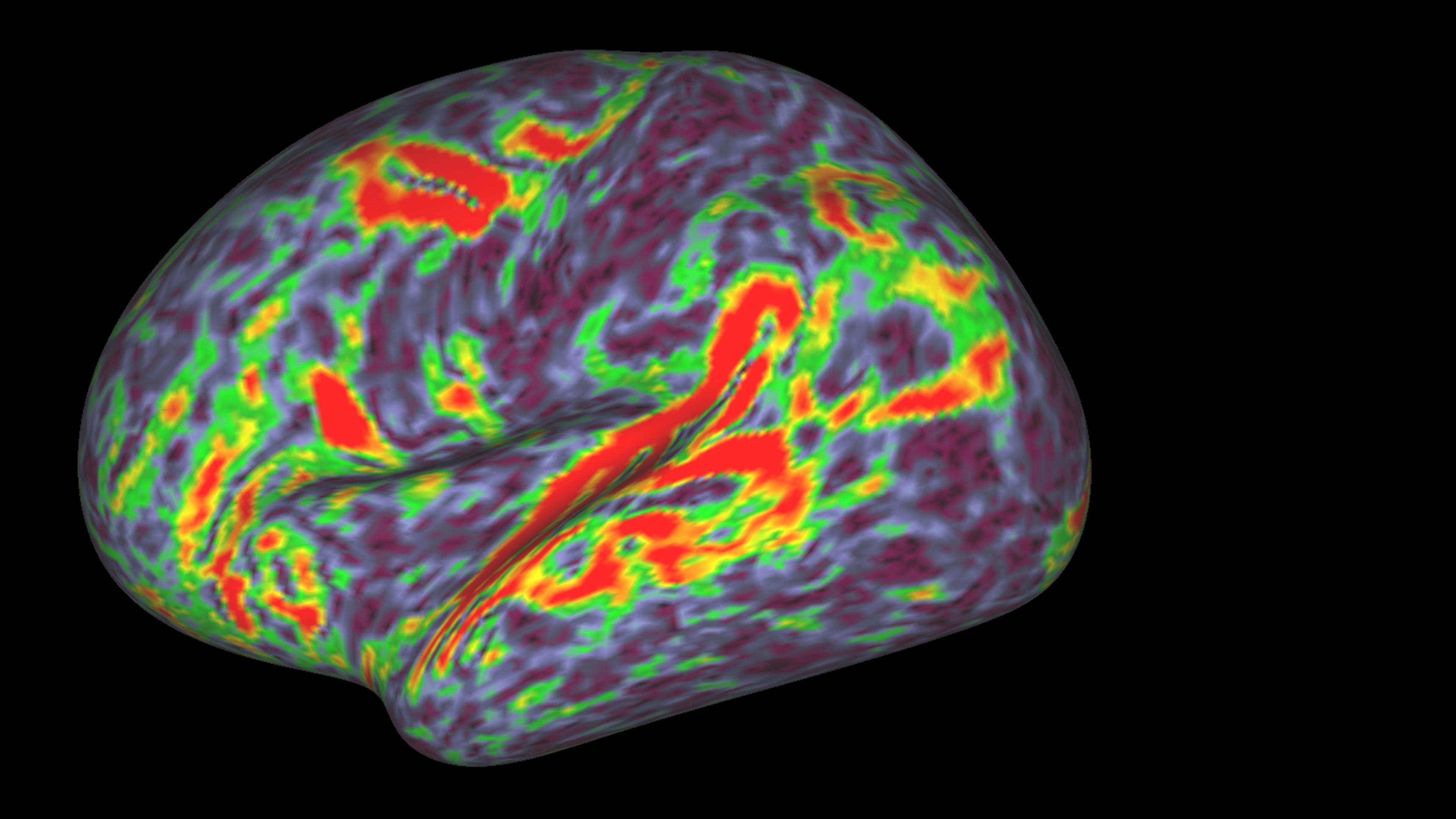

Glossy, high-res images of brain activity are created through a variety of techniques, including positron emission tomography (PET) and functional magnetic resonance imaging (fMRI). As a research tool, the power of PET and fMRI scans rests on their ability to show what is occurring inside the skull, tracking how neural regions activate in response to experimental stimuli. Brain scans seemed to hold the promise of revealing the ‘real’ neural basis of human subjectivity, and the method quickly began to travel outside of laboratory research contexts.

One of the earliest uses of brain scans in a courtroom came in 1992, when a defence team used a PET scan to argue that the defendant’s murder of his wife was caused by a large arachnoid cyst on his frontal lobe. The image sparked controversy over whether such evidence should be admissible in a trial where jurors are expected to make sense of it. At stake in this controversy was the fact that these sorts of images carry powerful rhetorical weight, a phenomenon bioethicists and critical scholars have tried to describe with neologisms such as ‘neurorealism’ and ‘neuroessentialism’.

Although fMRI and PET scans work on a similar basic principle, fMRI has become the dominant tool in contemporary cognitive neuroscience because it is easier to use, involves no ionising radiation and offers finer spatial and temporal resolution. PET scans typically use glucose metabolism as a proxy for brain activity: researchers inject a radioactively labelled tracer into a participant’s arm and track where in the brain it is metabolised. fMRI, by contrast, is noninvasive and tracks changes in blood oxygenation as a proxy for brain activity, so researchers can put subjects directly into the scanner.

By the 2010s, the widespread use of fMRI was provoking critical pushback not only from sociologists and philosophers but also from within the neuroscientific community itself. In large part, these criticisms focused on the ‘pipeline’ of methods and statistical frameworks that scientists use to analyse fMRI data. Craig Bennett’s ‘dead salmon’ study of 2010 was the most famous shot across the bow. In it, a dead fish in a scanner was ‘asked’ to determine what emotions people in photographs of social situations might be feeling, and its brain showed what looked like meaningful activation in response to the task.

These results were possible because of the statistical techniques researchers use to make sense of fMRI data. fMRI images divide the brain into 40,000 or more ‘voxels’ per scan, and data analysis tends to treat each such cubic unit independently when looking for activation. This means that at least 40,000 separate comparisons are being run, grossly multiplying the chance of false positives unless the results are filtered through a technique statisticians call ‘multiple comparisons correction’. The tradeoff is that such corrections risk erasing real effects, producing false negatives instead. The resulting debate over whether false positives or false negatives are more dangerous has produced a growing consensus that fMRI studies must be more transparent about their analytic methods: researchers should publish their ‘uncorrected’ data, and be clear about which algorithms and statistical methods they use to analyse it.
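The arithmetic behind the multiple-comparisons problem can be made concrete with a small simulation. This is a hypothetical illustration, not any real fMRI pipeline. It assumes 40,000 independent voxels with no true activation (in effect, a dead salmon) and draws each voxel's p-value uniformly at random. It then compares an uncorrected 0.05 threshold with a Bonferroni correction, the simplest form of multiple comparisons correction. The names `simulate_null_pvalues` and `count_hits` are invented for this example.

```python
import random

N_VOXELS = 40_000  # voxel count taken from the essay's example
ALPHA = 0.05       # conventional per-test significance threshold


def simulate_null_pvalues(n, seed=0):
    """Hypothetical helper: p-values for n voxels with NO real activation.

    Under the null hypothesis a valid test's p-value is uniform on [0, 1],
    so these numbers stand in for a brain in which nothing is happening.
    """
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


def count_hits(pvalues, threshold):
    """Hypothetical helper: how many voxels would be declared 'active'."""
    return sum(p < threshold for p in pvalues)


pvals = simulate_null_pvalues(N_VOXELS)

# Uncorrected: test every voxel at 0.05 as if it were the only test.
uncorrected = count_hits(pvals, ALPHA)

# Bonferroni: divide the threshold by the number of tests.
bonferroni_threshold = ALPHA / N_VOXELS
corrected = count_hits(pvals, bonferroni_threshold)

# Probability of at least one false positive somewhere, with no correction.
familywise_error = 1 - (1 - ALPHA) ** N_VOXELS

print(f"uncorrected 'active' voxels: {uncorrected}")
print(f"Bonferroni threshold: {bonferroni_threshold:.2e}")
print(f"corrected 'active' voxels: {corrected}")
print(f"P(at least one false positive, uncorrected): {familywise_error:.6f}")
```

With no real signal anywhere, about 5% of 40,000 voxels (around 2,000) still pass the uncorrected threshold, and at least one false positive is effectively certain. Bonferroni removes nearly all of them, but the same strictness would also suppress weak real effects, which is the tradeoff the paragraph above describes. Real analyses usually prefer less conservative corrections, such as false discovery rate or cluster-based methods, partly because neighbouring voxels are not actually independent.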

This kind of critical discussion of statistical methods has become a defining feature of the fMRI research community, although much work remains to translate its implications for the public in terms a nonspecialist can understand. And for the most part, even the work that does try to give nonspecialists the tools for a critical approach tends to focus on the well-trodden terrain of statistical analysis. Left out of this picture is how scientists construct the activities that a subject performs in the scanner. Beyond grasping the stakes of these statistical debates, then, the public would be less prone to the overreaches of ‘neurorealism’ if it better understood how scientists construct tasks that can model reality in the scanner.

An fMRI is not a picture of just any brain. It is the picture of the brain of a person who is lying flat on their back in a tube-like chamber the size of a casket – often equipped with a panic button in case an attack of claustrophobia sets in – performing a task designed by the research team. The setting is about as far from the sorts of ordinary situations you’d encounter in everyday life as you could imagine. Given these technological constraints, scientists come up with tasks for scanning subjects that will model as closely as possible whatever it is they are trying to measure. The data from such tasks may be what lies behind a short news story announcing that scientists have found the neurological basis of ‘creativity’ or ‘shame’.

Constructing a task is a delicate balance between three things: the thing you want to measure; the measurable entities you will use as a proxy for that thing; and the task you are going to use to measure that proxy entity. The thing you want to measure will be something like ‘empathy’, ‘impulsivity’ or the severity of a psychiatric diagnosis such as obsessive-compulsive disorder (OCD). But because you can’t measure those things directly, you have to construct proxies that you can measure. For something like ‘impulsivity’, you might choose to measure whether the subject’s decision-making responds to normal incentives. You then construct a task that will let you measure that proxy: for instance, you might pick a gambling task that offers higher and higher potential payoffs, coupled to an anklet that delivers increasingly painful shocks if the subject loses the bet.

Relating your object to your proxy to your task can require tradeoffs: a task might be an excellent measure of the proxy, but the proxy might be only hypothetically related to the thing you want to measure, or vice versa. Take the example of the multi-source interference task (MSIT), often used to measure ‘cognitive control’, which is in turn often used as a proxy for assessing the severity of diagnoses such as OCD. The MSIT presents the subject with slide after slide of three numbers and asks them to identify the one that differs from the other two. On some slides, that number’s position and the distracting numbers beside it point towards a different answer. Overriding these conflicting cues requires the subject to suppress an automatic response, relying on what neuroscientists theorise is ‘top-down control’ of cognitive processes. So far, so good: the task and the proxy seem well and plausibly linked. But if MSIT is being used to measure the severity of OCD or depression symptoms, it relies on a hypothetical link that maintains that OCD or depression is defined by a lack of top-down cognitive control. More generally, the use of MSIT and most other fMRI tasks relies on this kind of toggling between object, proxy and task. It’s missing the point to think about these kinds of triangulations as ‘true’ or ‘untrue’ – rather, the reality of this kind of scientific experimentation is simply that so-called ‘hard science’ relies on a hypothesis-dependent framing of the object you’re trying to measure.

Choosing a good task is not just a matter of constructing a hypothesis about how you are going to get at the thing you want to measure. More practically, a good task is one that shows a clear difference between the control conditions and the experimental conditions. Even the most elegant stitching of object to proxy to task is useless if you can’t tell the difference between control and trial, or if the difference varies so wildly between subjects that you can’t extract what the trial responses have in common. For imaging studies, the ideal task is one that activates a discrete region consistently across subjects, and a task that reliably activates the same region can be attractive even if its relevance to the object is less certain. This partly accounts for the growing popularity of MSIT, which reliably activates the cingulo-frontal-parietal cognitive attention network. Put differently, MSIT is attractive because it reliably ‘lights up’ a discrete system of brain structures that seem to be involved in decision-making and attention, which makes the effects it produces far less likely to be false positives à la the dead salmon. In turn, this makes it more likely that the task will be used as a proxy for still more objects, not necessarily because it seamlessly captures whatever object is being modelled.
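Why consistency across subjects matters can be sketched with a one-sample t statistic on each subject's task-minus-control difference. This is a simplified stand-in for a group-level imaging analysis, not how any particular fMRI package works. The two lists of signal changes are invented for illustration, not real data. Both have the same average effect, but in one the effect is consistent across subjects and in the other it swings wildly. The function name `group_t` is hypothetical.

```python
import statistics
from math import sqrt


def group_t(diffs):
    """Hypothetical helper: one-sample t statistic for per-subject
    (task minus control) differences.

    Larger values mean the average effect stands out more clearly
    against the variation between subjects.
    """
    n = len(diffs)
    return statistics.mean(diffs) / (statistics.stdev(diffs) / sqrt(n))


# Invented signal changes (task minus control) in one region, one value per subject.
consistent_task = [0.9, 1.1, 1.0, 0.8, 1.2, 1.0, 0.9, 1.1]
erratic_task = [3.0, -1.5, 2.5, -0.5, 2.8, -1.0, 1.9, 0.8]

for name, diffs in [("consistent", consistent_task), ("erratic", erratic_task)]:
    print(f"{name}: mean difference = {statistics.mean(diffs):.2f}, "
          f"t = {group_t(diffs):.1f}")
```

Both tasks produce the same mean difference, yet the consistent one yields a t statistic more than ten times larger. It would survive a strict, multiple-comparisons-corrected threshold, while the erratic one would not. Real analyses fit more elaborate models, but the same logic helps explain why reliably activating tasks are attractive.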

Neuroscientists are far from naive about these considerations, and a task travels a long pipeline from being invented around a lab meeting-room table to being accepted as a standard measurement. Because incentive structures in scientific publishing do not reward straightforward replication, other scientists who take up a newly published task will typically modify or extend it, perhaps adding a component or using it with a new population. Once a task has gained a moderate level of acceptance, scientists who want to champion it will discuss how to standardise and apply it. These discussions can result in ‘consensus papers’: collective statements by scientists about how a task should be used, attesting to its validity. Not all consensus papers are created equal; one appearing in Nature will carry more weight than one in a smaller regional journal. Despite an influential community of researchers championing MSIT, for instance, no consensus paper has yet emerged, partly because where such a paper appears will shape how widely the task is accepted.

Despite public enthusiasm for fMRI, there is some scientific consensus around its limits, and neuroscientists often urge caution in how their results are conveyed to lay people. Still, as the bioethicist Eric Racine argues, excitement about the potential of fMRI often neglects its dependence on task selection. The fact of the matter is that what happens in the scanner is the construction of measurable proxies for ‘naturalistic’ reality. The tests are delicate, and the results must be interpreted with scepticism and caution. As Racine writes: ‘Heightened awareness of these challenges should prevent hasty neuropolicy decisions and prompt careful consideration of the limitations of fMRI for real-world uses.’ What Racine means by ‘neuropolicy’ is the application of fMRI outside the laboratory, to do things such as assess a child’s academic potential in order to allocate allegedly limited educational resources, or to adjudicate legal responsibility for an action. But neurorealism, the popular authority attributed to fMRI, makes caution an uphill battle.

It’s true that fMRI is a valid scientific tool with manifold research uses illuminating what happens in the brain. It’s also true that deciding what fMRI images mean is fraught with multiple moments of contingency and interpretation. Deciding the meaning of these images is not a matter of straightforwardly reading results – even less so when it comes to what these images are made to mean in public discourse.