In 1937, the longtime Bolshevik leader Georgy Pyatakov was tried in Moscow for treason, sabotage and other alleged crimes against the Soviet Union. He gave a false confession, declaring: ‘Here I stand before you in filth, crushed by my own crimes, bereft of everything through my own fault, a man who has lost his Party, who has no friends, who has lost his family, who has lost his very self.’ He was executed for his alleged offences shortly thereafter – and he was one of many.

How had the Soviets persuaded so many defendants to testify to such lies? Some observers were convinced they had developed a secret tool for coercion. It wasn’t just that they appeared able to force people to say things, but that their victims actually appeared to believe the lies. The Soviets used a number of techniques to obtain confessions. They were not shy about resorting to brutality; some of the signed confessions found in the archives bear bloodstains. The accused were repeatedly interrogated until they felt like ‘automatons’. They were kept in solitary confinement, but well within earshot of other prisoners who were being beaten.

There was initially no widely used term to explain such attempts at forced persuasion, but that changed in the context of Mao Zedong’s victory in China and the Korean War some years later. Edward Hunter, a wartime propagandist with the US Office of Strategic Services, is credited with coining the flamboyant term ‘brainwashing’ in 1950 to account for mysterious confessions extracted from Western prisoners of war by the Chinese government. After brutal treatment, some victims not only confessed to unlikely charges but also appeared to be sympathetic to their captors. Brainwashing later made a prominent appearance in the film The Manchurian Candidate (1962), in which the evil Dr Yen Lo explains to a Communist co-conspirator that his American prisoner has ‘been trained to kill and then to have no memory of having killed … His brain has not only been washed … it has been dry-cleaned.’

With such origins, it is no wonder that the concept of brainwashing is criticised: it all seems so musty, reeking of the Cold War and questionable science. Some note that the concept has been used as a rhetorical weapon to attack followers of new movements (‘Ignore them; they must have been brainwashed to believe this nonsense’). Others dislike the term because it seems to blame victims for being gullible and weak, even though the victims of apparent brainwashing have included very gifted individuals. Both criticisms lose sight of the fact that this form of dark persuasion occurs in extremis, when people are literally at their wits’ end.

The philosopher Ludwig Wittgenstein observed that ‘a new word is like a fresh seed sown on the ground of the discussion.’ Hunter’s new word might as well have been crabgrass: it crowded out more reasonable alternatives. Some experts have preferred the term ‘coercive persuasion’ to explain the unusual behaviours observed among prisoners of war, cult victims and hostages who have paradoxically sided with their assailants or made palpably false and self-destructive confessions. Despite experts’ research on the subject, many still regard brainwashing as a bubbe-meise, a ghost story from the past.

‘Coercive persuasion’ – which I’ll use interchangeably with ‘brainwashing’ – is a much harder term to argue with. It’s obvious that people can be forced to do things, but it also appears that people can be coerced into believing things under certain conditions. We see this all too frequently in instances of false confessions, Stockholm syndrome (in which a hostage develops a loyalty to their captor), and cults that foster various religious and political ideas. There is even concern today that social media has become coercively persuasive, indoctrinating people to believe preposterous things (eg, ‘vaccines cause autism’, ‘COVID-19 isn’t worth worrying about’). Can social media contribute to coercive persuasion? To answer that question, one needs to more clearly define the sorts of situations that produce it.

Dogs at the Imperial Institute of Experimental Medicine, St Petersburg, in 1904. It was here that Pavlov did much of his famous work. Photo courtesy the Wellcome Collection

The Russian physiologist and Nobel Laureate Ivan Pavlov could be called the father of coercive persuasion science. When the Neva River in St Petersburg flooded in 1924, Pavlov gained unique insights into the effects of trauma on behaviour. The river inundated his canine lab and almost drowned his caged dogs. Pavlov observed that the dogs were not the same afterward – they forgot their learned behaviours, and the trauma changed their dispositions. Ever the experimentalist, Pavlov re-exposed one of his traumatised dogs to a trickle of water in the experimental chamber, which again disrupted the dog’s trained response. With his enormous patience and skill, Pavlov could condition a dog to respond to one specific note on the musical scale. The fact that trauma could disrupt such precise training seems to have fascinated him.

The Soviet leader Lenin was enchanted by the potential for Pavlov’s approach to be used to influence the Russian people. And the Soviet government provided financial support for a research institute with hundreds of staff. Pavlov observed that sudden stress immersion, sleep deprivation, isolation and methodical patience all contributed to the alteration of dogs’ behaviours.

Glimmerings of brainwashing surfaced during Stalin’s show trials in the 1930s; during the 1940s, when wartime governments searched for drugs to speed interrogation; and in the early Cold War years, when governments raced to persuade enemies to defect. Throughout the 20th century, governments invested heavily in research on coercive persuasion. Government agencies, foundations and universities collaborated on extensive studies of the use of drugs to change behaviour or extract information. Other studies demonstrated that sensory deprivation and sleep restriction increased suggestibility. For example, in one line of research, subjects listened to persuasive audio recordings about a particular topic (such as the country Turkey, or parapsychology), and some of them did so while placed in a dark and quiet sensory-deprivation chamber. After the experiments concluded, volunteers in the sensory-deprivation condition tended to show greater interest in the topics and, in some cases, greater influence from the recordings.

I wish I could say that all such studies used volunteers, but some experiments involving drugs were performed on unwitting victims. In the ‘midnight climax’ study of the 1950s-’60s, CIA operatives rented an apartment on Telegraph Hill in San Francisco and hired prostitutes to slip LSD into their customers’ drinks to see if they would confide information more readily.

Thus, brainwashing has a more substantial history than is apparent at first glance, but it will always seem fictitious unless there are clear ways of assessing it. So what are its defining and measurable properties? First, coercive persuasion typically involves surreptitious manipulation – individuals might not even know they are being targeted. Further, actions are taken at the expense of the targeted individuals; someone else benefits from the manipulation. Some degree of sleep deprivation is often part of the regimen, leaving the victim fatigued, confused and suggestible. And coercive persuasion seems to be more effective when the victim is isolated from other views and beliefs.

As a thought experiment, let me suggest how these features might be rated and examine how they appear in documented instances. There are countless examples of purported brainwashing, but many experts would include confessions by the Bolsheviks during Stalin’s ‘Moscow Trials’ of the late 1930s and the behaviour of the Hungarian cardinal József Mindszenty during his show trial in 1949. Most would also label the treatment of some US POWs in the Korean War and some of the victims of the CIA’s infamous MKUltra experiments of the 1950s-’70s as instances of attempted brainwashing – one MKUltra-funded programme in Montreal subjected people to intense electroconvulsive treatment, repeated doses of LSD, and a quarter of a million or more tape loops suggesting how to change their behaviour.

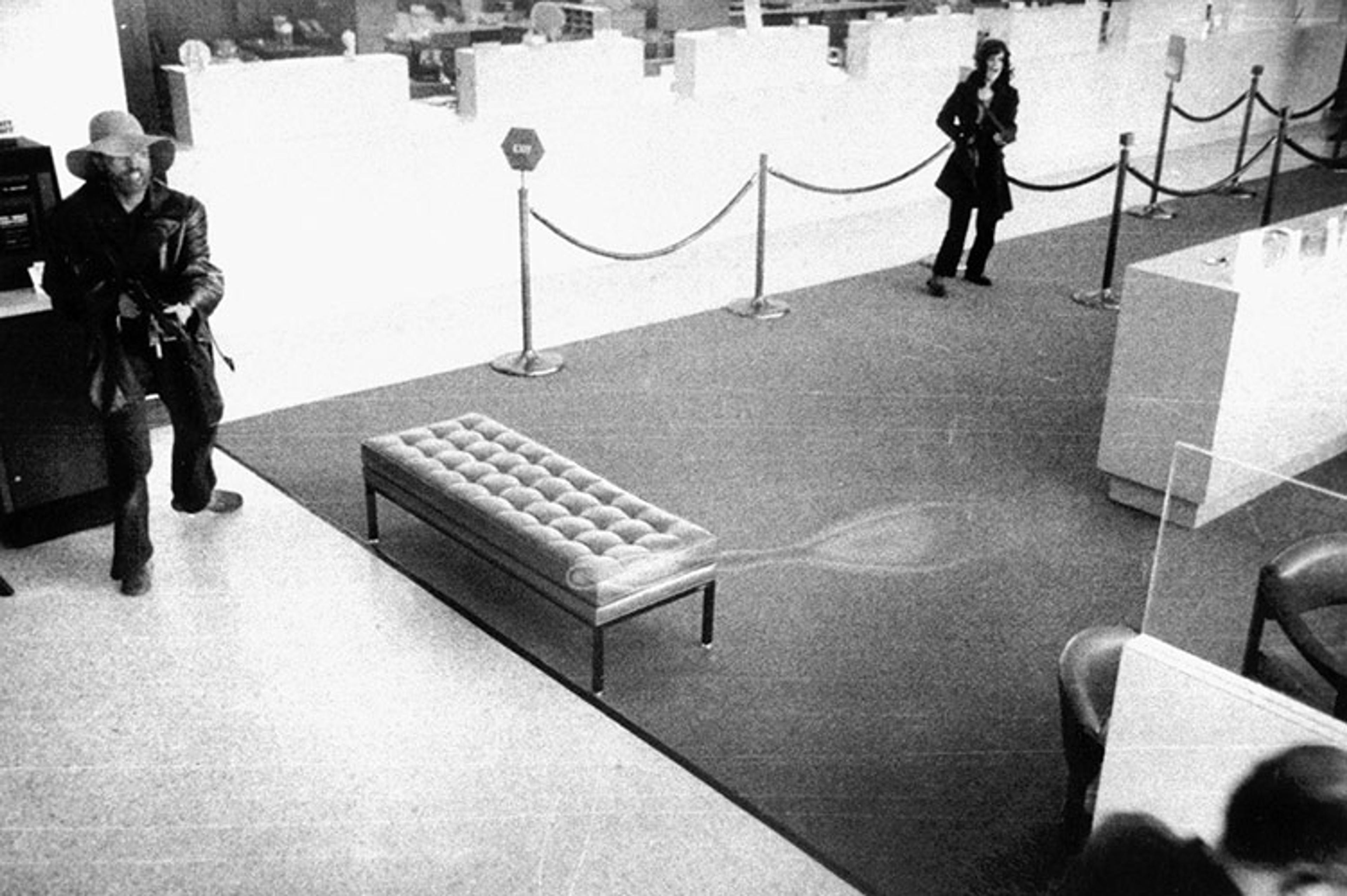

Patty Hearst (right) yells instructions to customers during a robbery of the Hibernia Bank in San Francisco, 15 April 1974. Courtesy Wikipedia

Various examples of hostage behaviour also seem sensible to consider – including cases such as the kidnapping and conversion of Patricia Hearst by the Symbionese Liberation Army in 1974. Finally, it is fitting to consider lethal cults such as Jonestown and Heaven’s Gate. Based on my reading, these instances would score highly on the putative variables that define coercive persuasion, whereas instances of ‘mere’ persuasion would have low scores.

In evaluating each of these unhappy instances, I ask a series of questions:

- Was sleep altered? Sleep is commonly manipulated by interrogators, hostage-takers and cults. Cult initiation ceremonies are sometimes held after a period of sleep deprivation. When sleep is curtailed, judgment deteriorates and people become more suggestible. One can rate the severity of the sleep manipulation by how frequently, consistently and thoroughly it is imposed.

- Was there evidence of coercive isolation? Sensory restriction and isolation from friends and family are crucial for coercive persuasion. One can rate the extent of this isolation. Is the victim forbidden to leave the premises or to communicate with others, or merely discouraged from contacting outsiders?

- How much surreptitious control was imposed? Were the victims given disinhibiting drugs without their knowledge, and was this done once or many times? Were they forced to participate in guilt-inducing, demeaning rituals or subjected to harsh group criticism?

- Was the participation in the victim’s best interest or someone else’s? Coercive persuasion commonly takes place in settings where the victim’s freedom and safety are jeopardised and where someone else benefits from the subject’s degradation. One could rate this feature based on the extent to which the persuasive tactics involve imprisonment or jeopardise safety.

When more of these conditions are evident, it is more likely that victims have been subjected to coercive persuasion, as opposed to ‘just’ propaganda or indoctrination. Take Jonestown, for instance. The residents of this commune in Guyana were physically restrained from leaving, and communication with outsiders was sharply limited. They were forced to make demeaning public confessions and were chronically sleep-deprived. When it ended, more than 900 individuals died, sacrificed by their leader, Jim Jones.
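For readers who like to see a thought experiment written down, the four questions above could be turned into a rough tally. The following is only a sketch under stated assumptions: the 0-to-3 scale, the class and field names, and the equal weighting of the four features are all my own illustrative choices, and the example numbers are placeholders rather than measured ratings of any real case.

```python
from dataclasses import dataclass, fields

MAX_RATING = 3  # assumed scale: 0 = absent, 3 = severe (not from the essay)


@dataclass(frozen=True)
class CoercionRatings:
    """Hypothetical ratings for the four questions, one integer each."""
    sleep_alteration: int
    coercive_isolation: int
    surreptitious_control: int
    benefit_to_others: int  # harm to the victim for someone else's gain

    def __post_init__(self):
        # Reject ratings that fall outside the assumed 0..MAX_RATING scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0 <= value <= MAX_RATING:
                raise ValueError(f"{f.name} must be between 0 and {MAX_RATING}")

    def total(self) -> int:
        """Sum of all four ratings (equal weighting is an assumption)."""
        return sum(getattr(self, f.name) for f in fields(self))

    def conditions_evident(self) -> int:
        """How many of the four conditions are present at all."""
        return sum(1 for f in fields(self) if getattr(self, f.name) > 0)


# Placeholder numbers: a case resembling the commune described above,
# and a hypothetical instance of 'mere' persuasion such as an advertisement.
jonestown_like = CoercionRatings(3, 3, 3, 3)
ordinary_advert = CoercionRatings(0, 0, 1, 1)

print(jonestown_like.total(), jonestown_like.conditions_evident())
print(ordinary_advert.total(), ordinary_advert.conditions_evident())
```

A tally like this settles nothing by itself. Its only use is to make explicit the claim that coercive persuasion is a matter of degree, with more conditions and greater severity pointing further away from ‘mere’ persuasion.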

It would be inaccurate to portray coercive persuasion as some sort of 20th-century aberration. The recent rise and fall of the Nxivm cult in New York suggests that dark persuasion persists. Court testimony and reporting revealed that the organisation involved, among other things, branding and sexual exploitation of female members. Even after their leader, Keith Raniere, was sentenced to 120 years for abusing women physically, emotionally and financially, some of his acolytes danced outside the prison, protesting his incarceration.

The presence of coercive persuasion in one context or another is not a black-or-white distinction. There are contemporary situations that raise questions about new directions in persuasion. As noted earlier, one wonders if coercive persuasion can be conducted via social media. That question needs careful study. Can social media be used to isolate people, coerce or shame them? Is such manipulation surreptitious or is it above-board? Can social media encourage people to risk their safety and enrich others? Can it interfere with the sleep of its adherents? One of the few things that many conservatives and liberals seem to agree on is the darkly persuasive capabilities of social media.

We have a century of observations demonstrating that people can be coerced to do things and coercively persuaded to believe things. It would be a mistake to dismiss such observations. If we ignore the potential developments of brainwashing in the 21st century, we will be defenceless against it.