Do you remember colouring as a child, striving to stay within the lines, then feeling frustration, disappointment, maybe even shame, when you made a mistake and your strokes smeared across the borders? From a young age, we encounter error and the unpleasant feelings that it brings. We learn that what we expect or attempt and what we get are not always the same, and so we try to bring the two closer together. We see this in the stumbles and the falls that precede walking. Later, in school, errors carry a more formal penalty, red scars criss-crossing worksheets and essays, telling us to change something, because our prediction of what was expected does not match reality.

Moments of life such as these, strung along the thread of our timeline, teach us to change our thinking, our expectations, to align with the world out there. Less often, and usually with less success, we might change the world to meet our expectations. Either way, from all this arises the prevailing view that mismatch and error are a bad thing, a crack in the sidewalk to avoid stepping on. But error inherently carries surprise with it, and surprise can be good as well as bad. At the extreme, consider an unexpected pregnancy and what it might bring. This value-neutral feature of surprise is why I say we should reconsider error as a potential source of joy, not merely an undesired outcome.

Uncertainty, error, difference, surprise – you might view these words as conceptually distinct, but in computational frameworks they are bound together. One cannot think about uncertainty without invoking probability. If you know the probabilities of an event's possible outcomes, you can compute the associated uncertainty (for instance, as a variance or as an entropy). When the outcome is revealed, you can compute the surprise it carries from the probability of its occurrence: the more unlikely an event, the more surprised you will be should it happen. Error, in turn, is simply the difference between what we expect and what turns out to be true, and it is this difference that evokes surprise.

Consider deciding between burgers and pizza for a takeout meal this evening by tossing a coin. Either outcome, heads or tails, is equally likely and therefore not especially surprising. Now consider rolling a nifty six-sided die to select between six takeout options. Whichever side comes up will cause more surprise than either face of the coin, because your expectation of any one outcome was lower to begin with (a 1/6 chance of occurring rather than 1/2). What has changed between the coin toss and the die roll? You have increased the range of possible outcomes; in computational terms, you have increased the entropy of the choice, and with it both your initial uncertainty and your eventual surprise.
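To make the coin-and-die comparison concrete, here is a minimal Python sketch using the standard information-theoretic definitions: the surprise of an outcome with probability p is -log2(p) bits, and entropy is the average surprise across all possible outcomes. It assumes a fair coin and a fair die, and it does not compute variance, the other measure of uncertainty mentioned earlier.

```python
import math

def surprisal_bits(p: float) -> float:
    """Surprise (self-information) of an outcome with probability p, in bits."""
    return -math.log2(p)

def entropy_bits(probs: list[float]) -> float:
    """Shannon entropy of a distribution: the expected surprise, in bits."""
    return sum(p * surprisal_bits(p) for p in probs if p > 0)

# Assumed fair devices: every outcome equally likely.
coin = [1 / 2] * 2  # burgers or pizza
die = [1 / 6] * 6   # six takeout options

print(f"coin: entropy {entropy_bits(coin):.3f} bits, "
      f"surprise per outcome {surprisal_bits(1 / 2):.3f} bits")
print(f"die:  entropy {entropy_bits(die):.3f} bits, "
      f"surprise per outcome {surprisal_bits(1 / 6):.3f} bits")
```

For a uniform choice like these, the entropy equals the surprise of any single outcome: 1 bit for the coin, and log2(6), roughly 2.585 bits, for the die.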

The surprise experienced with the six-sided die is tied to moderately improbable events that are nonetheless obviously possible – that is, all the possible outcomes exist in your internal repertoire of knowledge. But suppose an event occurs for which you have no prior context, such as a heavy scaffolding pole suddenly dropping from the sky above you. Here, you've encountered total novelty, an event with no previously known probability. If you survive, you will swiftly fit this new item into your knowledge schema for urban environments. In this sense, uncertainty, error and even surprise may represent states you wish to minimise – you don't want to be caught out by any falling scaffolding in the future – and current work in neuroscience and machine learning would agree with you. But I believe there is more to the story of error than this.

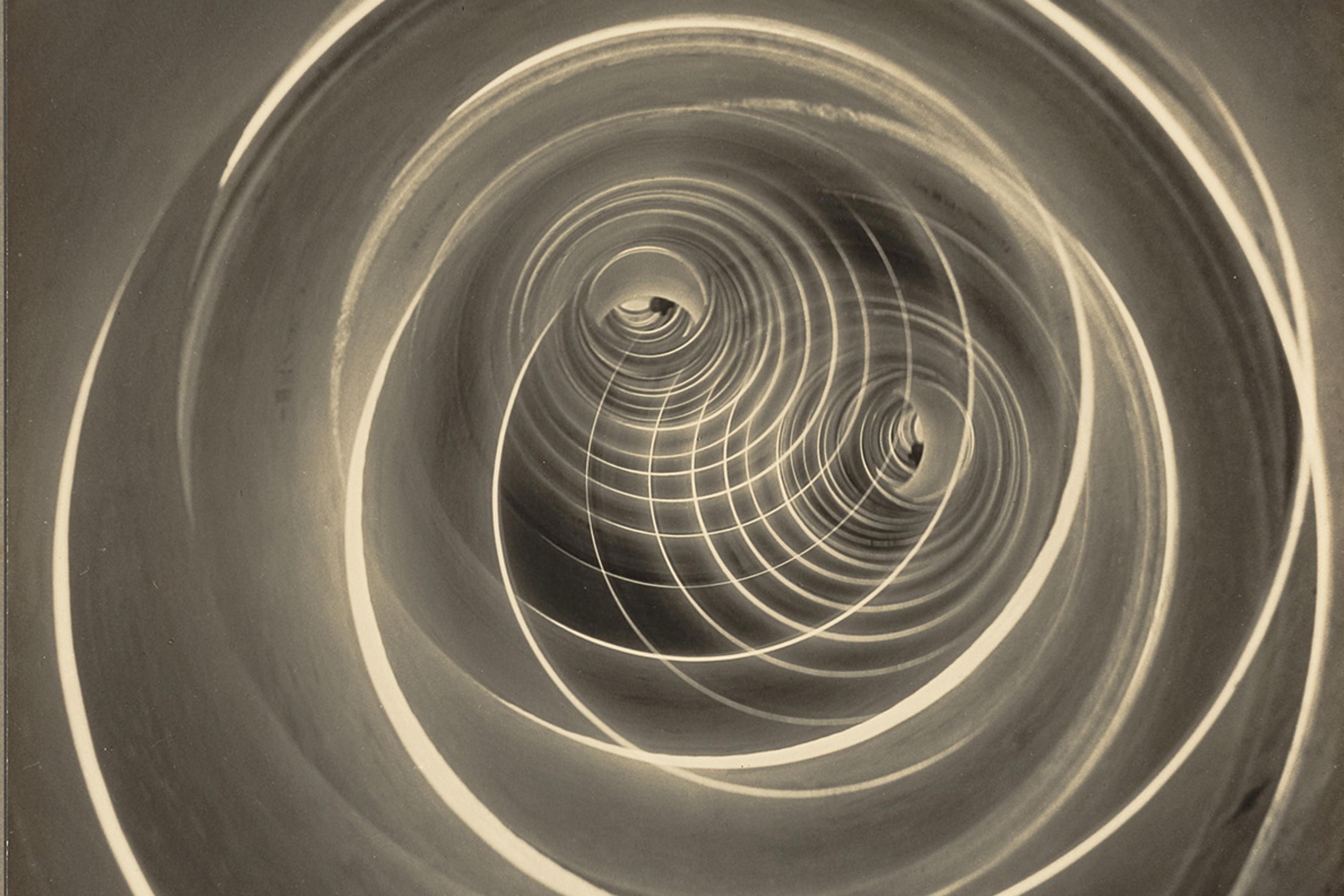

Seminal theories in neuroscience cast the brain as a prediction machine. In helping us navigate the world, the brain makes constant predictions about what is likely to happen next, including as a result of our own actions. These predictions are derived from prior experiences and their value is clear – they allow us to overcome the sluggishness in our sensory inputs and motor outputs, and to minimise surprise, which can be costly.

Think of the effort involved – cognitive, motor, even emotional – in slamming on the brakes when an animal dashes in front of the car you're driving. The idea is that the brain computes both a prediction of how likely it is that something will suddenly appear in the road and a confidence in that prediction, an acknowledgment that it is imperfect, and that together these inform various decisions, such as how fast to drive and how vigilant to be. Once the outcome is revealed – the journey is uneventful or not – the brain computes the difference, or error, between reality and its prior prediction. This prediction error serves as a learning signal that updates the brain's previously held hypotheses, ideally improving the accuracy of its future predictions.
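The predict, compare and update cycle described here can be sketched with a simple delta rule, a textbook error-driven learning scheme with the same form as the Rescorla-Wagner model. It is swapped in purely for illustration and makes no claim about how the brain actually implements this. The journey outcomes, the starting belief of 0.5 and the learning rate of 0.1 are all hypothetical, and the sketch leaves out the confidence term entirely.

```python
def update_belief(prediction: float, outcome: float, learning_rate: float = 0.1) -> float:
    """One delta-rule step: move the prediction towards the outcome by a fraction of the error."""
    error = outcome - prediction  # prediction error: reality minus expectation
    return prediction + learning_rate * error

# Hypothetical journeys: 1.0 = an animal dashed into the road, 0.0 = uneventful.
journeys = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0]

belief = 0.5  # assumed initial prediction that an animal appears on a given journey
for outcome in journeys:
    error = outcome - belief  # computed here only so it can be printed
    belief = update_belief(belief, outcome)
    print(f"outcome={outcome:.0f}  error={error:+.3f}  new belief={belief:.3f}")
```

Each eventful journey produces a large positive error and pushes the belief up; each uneventful one produces a smaller negative error and lets the belief drift back down.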

According to this view, whenever an error occurs, information is gained; and the brain, as it perambulates its environment, continuously updates its model of that environment, predicting and testing, encountering errors and revisiting its prior premises. It might seem jarring to think that our brains compute complex probabilities in this way, even while 'we' might struggle to do the same at a conscious level, yet that is what the research suggests. The brain, in seeking to live happily ever after, pursues the minimisation of uncertainty and surprise, to avoid the more taxing challenge of facing the unexpected.

Some have taken this line of research so far as to suggest that the brain is fundamentally an error-minimising machine. But it doesn't take a neuroscientist to think twice about that neat conclusion. As others have pointed out, the problem with casting uncertainty- and error-minimisation as the apotheosis of human motivation is that a person could remain in an unsurprising state fairly easily, by staying in a proverbial dark room for a whole lifetime. Why risk leaving the room and committing an error? Dwelling in the hypothetical dark room, reminiscent of Plato's cave, is understandable – it's scary out there – but we also know it is no way to live.

Beyond philosophical thought experiments, we regularly see humans going out in search of both the novel and the unexpected, baring themselves to the onslaughts of error and, in doing so, driving progress. We try out new things – haircuts, foods, jobs – knowing they might not work out. Some of us, such as the hitchhikers and mountain climbers of this world, are more risk-seeking than others, daring the unknown to come at them. But other risky acts are far more common – I'm thinking of anyone who chooses to have a child, in spite of the entropy in meiosis.

Babies are born explorers motivated by an instinctual – that is, neurally embedded – curiosity. Endowed with young inference-machine brains and only a limited model of the edible world, infants will deliberately sample all sorts of items as soon as they can grasp objects. These observations challenge the idea of a pure error-minimisation drive and suggest that humans are also error-seeking.

Error-minimisation also drives progress in machine learning and, indirectly, in artificial intelligence, where the aim is to offload, and perhaps enhance, human prediction – but here too the goal of error-minimisation quickly becomes problematic. Machine learning algorithms are trained to reduce error on the data they are given, and while they might appear objective and mathematical in nature, they are only as good as the data humans feed into them. Human bias therefore becomes encoded in algorithms, which has led to the perpetuation of racial prejudice in areas such as job recruitment, criminal profiling, hairstyling and financial services.

Let me further illustrate the problem with error-minimisation, whether in the context of a machine learning algorithm or a surprise-avoidant human. Suppose you set out to open a restaurant and, to maximise success, you find out people's favourite foods. You will end up opening a pizzeria. Congratulations, you have minimised your loss function. Now imagine this process is repeated by friendly competitors. We would be faced with strings of eateries serving the same foods. In fact, we can see this result in the restaurants available to us, because pizza, on average, works. But Noma, named the best restaurant in the world by Restaurant magazine in 2021, has moss on the menu. Its founders strayed from the expected, actually aimed for error, and won. Would a machine learning algorithm have predicted that? How about an error-minimising brain? And why is Noma listed as the best restaurant in the world? Is it that moss is uniquely delicious relative to pizza? Or is it in homage to its founders' decision to throw uncertainty-minimisation to the wind?
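The pizzeria logic can be sketched as a small loss-minimisation problem. Suppose, as an assumption, that the 'loss' of a menu is the share of people whose favourite food it fails to serve (a zero-one loss); the menu that minimises this loss is then always the most common answer. The survey counts below are invented purely for illustration, including the lone vote for moss.

```python
from collections import Counter

# Hypothetical survey of 100 favourite foods (made-up data, for illustration only).
favourites = ["pizza"] * 40 + ["burgers"] * 25 + ["curry"] * 20 + ["sushi"] * 14 + ["moss"] * 1

def expected_mismatch(choice: str, votes: list[str]) -> float:
    """Average zero-one loss: the share of respondents whose favourite is not `choice`."""
    return sum(v != choice for v in votes) / len(votes)

options = sorted(set(favourites))
losses = {option: expected_mismatch(option, favourites) for option in options}
best = min(losses, key=losses.get)  # the option with the smallest average loss

# The most common answer (the mode), for comparison with the loss-minimising choice.
mode = Counter(favourites).most_common(1)[0][0]

print(losses)
print("loss-minimising menu:", best, "| most common favourite:", mode)
```

Whatever the counts, minimising average zero-one loss lands on the mode of the votes; an option with a single vote, however memorable, can never win.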

More than a century ago, Fyodor Dostoevsky made a similar observation, writing presciently:

[E]ven if man really were nothing but a piano-key, even if this were proved to him by natural science and mathematics, even then he would … purposely do something perverse out of simple ingratitude, simply to win his point. And if he does not find means he will contrive destruction and chaos …, only to win his point!

The beauty of error abounds. Humans learned to reproduce their visual experience through art, assigning value to accurate representations. But then the Impressionists came along and blurred lines, committing errors, introducing a new kind of beauty. Beneficial errors are not just the product of a human's wilful spurning of rules. Think of genetic mutations that arise spontaneously, the code of life itself erring and yielding the glory of red hair, adding a new possibility to the range of human physiognomy. Increasing the entropy, the uncertainty or the variance of possibilities is, in many instances, known to strengthen a system, be it a gene pool, a nervous system (through neural variability) or gut flora, challenging the idea that optimal life is sans-serif.

The screen I write on is framed with faded pink sticky notes, each bearing an Italian phrase or word, thoughtfully written down by my Italian-speaking colleague in my Italian-heavy research group. The one in the lower left-hand corner says divertiti, the Italian expression for 'have fun'. As you might surmise, divertiti comes from the Latin divertere, meaning 'to turn away' (from the usual). Language itself carries the value of difference and the unexpected, equating it with enjoyment. Am I entreating you to commit error, whenever you can? Maybe a little.

But really what I recommend is that you hold space for the unexpected and the unknown. Holding space, lining the borders of our priors with wide margins, is a costly but worthwhile affair, especially if you value the uniquely human phenomena of mystery, laughter, wonder, vision and awe. So gamble sometimes. Consider the range of possibilities. Make room for error, before and after a decision. Click on an article you have no interest in. Mess with the algorithm. Swipe right on someone who is 'not your type' one time in 10. Increase the entropy in your options. Because error can be tragic, but it can just as well be magic.