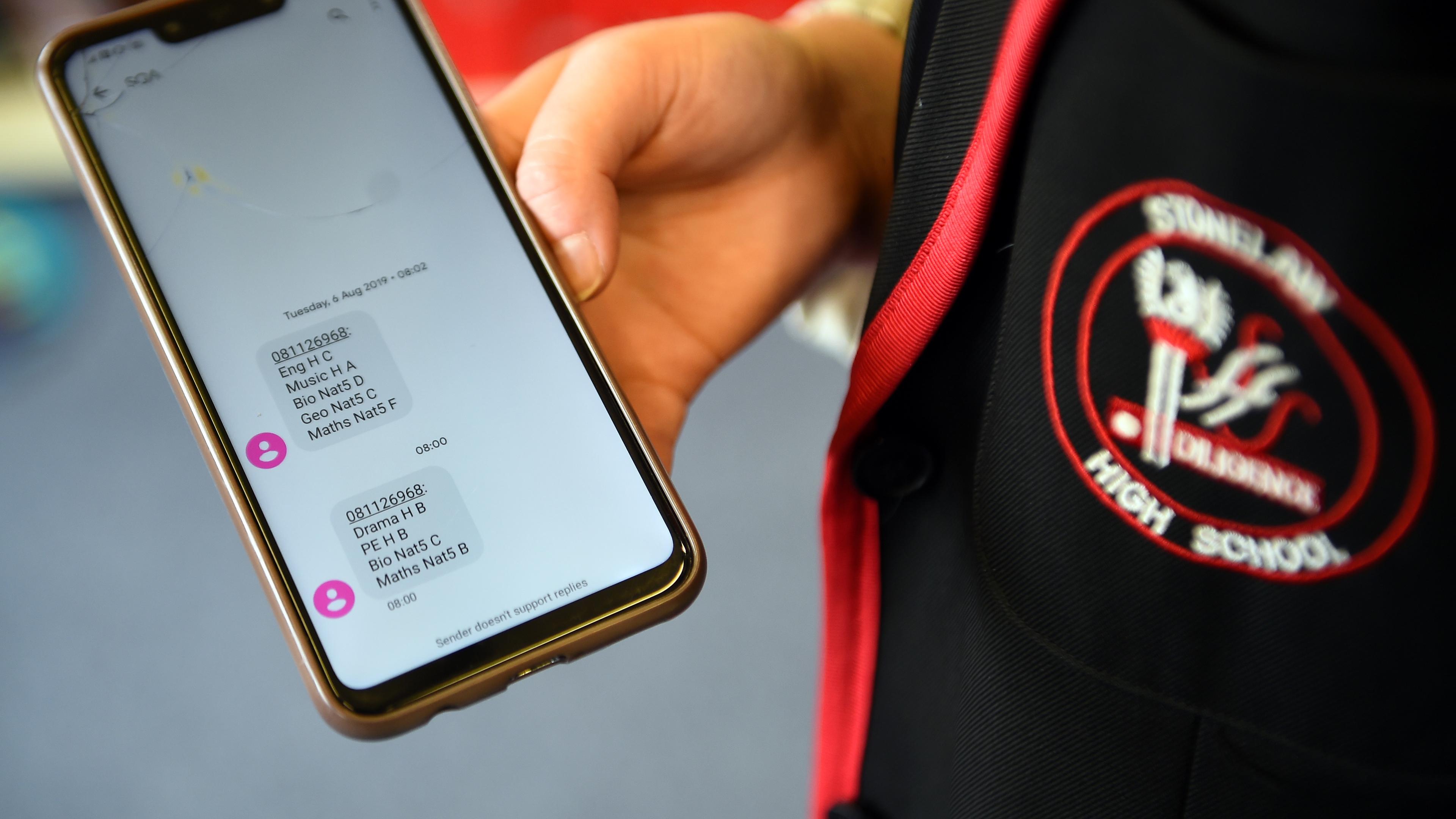

States and firms with whom we routinely interact are turning to machine-run, data-driven prediction tools to rank us, and then assign or deny us goods. For example, in 2020, the 175,000 students taking the International Baccalaureate (IB) exams for college learned that their final tests had been called off due to the COVID-19 pandemic. Instead, IB announced last July, it would estimate grades using coursework, ‘significant data analysis from previous exam sessions, individual school data and subject data’. When these synthetic grades were published, outrage erupted. Thousands signed a petition complaining that scores were lower than expected. Students and parents had no means to appeal the predictive elements of grades, even though this data-driven prediction was uniquely controversial. In the United Kingdom, a similar switch to data-driven grading for A-level exams, used for entrance to university, prompted cries of racial bias and lawsuit threats.

As the IB and A-level controversies illustrate, a shift from human to machine decision-making can be fraught. It raises the troubling prospect of a future turning upon a mechanical process from which one’s voice is excluded. Worries about racial and gender disparities persisting even in sophisticated machine-learning tools compound these concerns: what if a machine isn’t merely indifferent but actively hostile because of class, complexion or gender identity?

Or what if an algorithmic instrument is used as a malign instrument of state power? In 2011, the US state of Michigan entered a multimillion-dollar contract to replace its computer system for handling unemployment claims. Under the new ‘MiDAS’ system implemented in October 2013, the number of claims tagged as fraudulent suddenly spiralled. Because Michigan law imposes large financial penalties on unemployment fraud, the state agency’s revenues exploded from $3 million to $69 million. A subsequent investigation found that MiDAS was flagging fraud with an algorithmic predictive tool: out of 40,195 claims algorithmically tagged as fraudulent between 2013 and 2015 (when MiDAS was decommissioned), roughly 85 per cent were false.

In both the grading and the benefits cases, a potent – even instinctual – response is to demand a human appeal from the machine as a safeguard. The Toronto Declaration – launched in 2018 by Amnesty International and Access Now – called for artificial-intelligence decisional tools to be appended with an ‘accessible and effective appeal and judicial review’ mechanism. Unlike machines, humans can be capable of nuanced, contextualising judgment. They’re capable of responding to new arguments and information, updating their views in ways that a merely mechanical process cannot.

Yet, as powerful as these grounds might seem, the resort to a human appeal implicates technical, social and moral difficulties that are obscure at first blush. Without for a moment presuming that machine-driven decision tools are unproblematic – they’re not – the idea of creating an appeal right to a human decision-maker needs to be closely scrutinised. That ‘right’ isn’t as unambiguous as it first seems. It can be implemented in quite divergent ways. Implemented carelessly, it could exacerbate the distributional and dignitary harms associated with wayward machine decision-making.

A right to a human appeal from a machine decision – such as the IB grade prediction or the MiDAS fraud label – can be understood in two different ways. It could first be translated into an individual’s right to challenge a decision in their unique case. I suspect that most people have this in mind when they think of a human appeal from a machine decision: You got my facts wrong, and you owe it to me as a person to correctly rank and treat me based on who I am and what I have, in fact, done.

Superficially alluring, this version of an appeal right leads to troubling outcomes. To begin with, there’s a substantial body of empirical work showing that appending human review even to a simple algorithmic tool tends to generate more, not fewer, mistakes. This finding, rightly ascribed to a 1954 paper by the psychologist Paul Meehl, was tendered in a historical context of crude statistical tools competing against putatively sophisticated clinical judgments. More than a half-century later, with machine prediction considerably refined, it still stands up well.

What of cases such as MiDAS that have staggeringly high error rates? In the same period that MiDAS was operational, human decision-makers working with the MiDAS system had a roughly 44 per cent false fraud claim rate. This is far better than MiDAS itself, but on its own not much better than flipping a coin. The real question in the case of MiDAS is whether any cost-effective fraud-detection system exists that’s both sensitive and specific.

Moreover, an individualised right to human appellate review is likely to have unwelcome distributive effects. For example, different families dismayed by an exam prediction are unlikely to be similarly situated in their resources or sophistication. Some will be more capable of appealing than others. Without some well-intentioned advocacy group’s intervention, it’s likely that socioeconomic status and financial resources will correlate with the propensity to appeal. There’s no reason to assume that appeals will be made only in cases when the machine errs – or that a representative sample of errors will be appealed. Indeed, the appellate right’s effect might well be to cast concentrated error costs upon disadvantaged groups and communities.

In the educational context, this approach will limit, not expand, intergenerational mobility. Assume, for example, that A-level predictions were, as alleged, biased in favour of wealthier schools with fewer minority students. A system of individualised appeals would likely not only enable a disproportionate number of wealthier students to challenge deflated grades, but also leave in place the overrepresentation of wealthier, whiter students in the pool of initially higher grades. Hence, the permission to appeal individual grades will strongly tend toward more, not less, regressive outcomes than a pure machine decision – even if the latter is error-prone.

But there is another way of understanding the same appeals right: this is a complaint, not that I have been wrongly classified, but instead that the algorithm ranking me is characterised by a systematic failure of capacity or function. This isn’t so much a claim to a correct decision as a claim to be treated by a well-calibrated instrument. It’s this version of the appellate right that we should embrace.

Any decisional mechanism, whether human- or machine-operated, will generate errors. An individualised appeals mechanism might reduce the volume of errors. But it might also increase it. Imagine if it’s largely wealthy parents of students (rightly) receiving low grades who lodge objections: they might secure (false) upward corrections from flawed human decision-makers. The net error rate would rise. Therefore, an effective review mechanism needs to focus not solely on discrete cases but on the overall performance of the predictive tool, and its capacity for improvement. This programmatic and systemic right of appeal would tee up the question of whether the algorithm had been designed to produce a high error rate – as is plausibly the case with MiDAS. It would also consider how errors are distributed, and determine whether vulnerable populations are subject to disproportionate burdens. Finally, it would demand that an algorithm’s designer justify design choices in light of the best available technology in the field.
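The arithmetic behind that thought experiment can be made concrete. What follows is only a minimal sketch in Python: every figure in it (cohort sizes, error counts, appeal counts, and the reviewers’ `FIX_RATE` and `FLIP_RATE`) is invented for illustration and drawn from neither the IB nor the MiDAS record. It tallies errors before and after appeals, overall and by group, which is roughly the kind of question a systemic review would ask.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    """One group of students. Every figure is hypothetical."""
    name: str
    students: int            # students in the group
    machine_errors: int      # grades the machine got wrong
    appeals_of_errors: int   # appeals against genuinely wrong grades
    appeals_of_correct: int  # appeals against grades that were right


def errors_after_appeal(c: Cohort, fix_rate: float, flip_rate: float) -> float:
    """Errors left in a cohort once human reviewers have handled its appeals.

    fix_rate:  share of appealed wrong grades that reviewers correct
    flip_rate: share of appealed correct grades that reviewers wrongly change
    """
    fixed = c.appeals_of_errors * fix_rate
    introduced = c.appeals_of_correct * flip_rate
    return c.machine_errors - fixed + introduced


# Assumed scenario: wealthier families appeal far more often, and mostly
# against grades that were in fact correct.
cohorts = [
    Cohort("wealthier", 1000, 100, 60, 200),
    Cohort("disadvantaged", 1000, 150, 10, 10),
]
FIX_RATE = 0.5   # assumption: reviewers repair half of the real errors they see
FLIP_RATE = 0.4  # assumption: reviewers wrongly change 40% of correct grades

total_students = sum(c.students for c in cohorts)
before = sum(c.machine_errors for c in cohorts)
after = sum(errors_after_appeal(c, FIX_RATE, FLIP_RATE) for c in cohorts)

print(f"overall error rate before appeals: {before / total_students:.2%}")
print(f"overall error rate after appeals:  {after / total_students:.2%}")
for c in cohorts:
    remaining = errors_after_appeal(c, FIX_RATE, FLIP_RATE)
    print(f"{c.name}: {c.machine_errors / c.students:.2%} "
          f"-> {remaining / c.students:.2%}")
```

Under these made-up inputs, the total number of wrong grades goes up rather than down once appeals are processed, and the machine’s original errors in the disadvantaged cohort are left almost untouched. Different assumptions would give different results; the point is only that the outcome depends on who appeals and how reliable the reviewers are.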

The right to a well-calibrated instrument is best enforced via a mandatory audit mechanism or ombudsman, and not via individual lawsuits. The imperfect and biased incentives of the tool’s human subjects mean that individual complaints provide a partial and potentially distorted picture. Regulation, rather than litigation, will be necessary to promote fairness in machine decisions.

For the most profound moral questions raised by human-to-machine transitions are structural and not individual in character. They concern how private and public systems reproduce malign hierarchies and deny rightful opportunities. Designed badly, a right to an appeal exacerbates those problems. Done well, it is a chance to mitigate them – reaping gains from technology for all rather than only some.