On a recent wet and miserable morning in Dublin, I asked Alexa for a forecast of the day ahead. The device answered jauntily with the weather in Beirut, where it was a balmy 30°C. Was Alexa really having a laugh at my expense? As a computer scientist, I knew this ‘blip’ had a more mundane explanation: automatic speech recognition is still far from perfect. But as a computer scientist with a keen interest in the algorithmic workings of humour, I was more excited than annoyed by Alexa’s behaviour.

Although we tend to worry about our devices (and the companies behind them) knowing too much about us, how great would it be if those devices could know us well enough to joke around with us like trusted friends? There are still as many landmines as roses on the path to genuinely witty machines, since even a disposable quip entails a complex web of decisions and presupposes a wealth of knowledge about people and the world. Nonetheless, I believe that our devices won’t truly deserve the label ‘smart’ until they know enough about us – as humans and as individuals – to occasionally act the fool.

But here’s the rub: when was the last time a computer really made you laugh? Our devices provoke a range of emotional responses, but mirthful joy is surely one of the rarest. It is hardly surprising that this fanciful notion of giving machines a real sense of humour ranks low on most technology wish-lists, not least because we suspect that our machines already hold us in such low regard. Still, AI researchers can cite more than the obvious reasons for trying, and for treating machine humour as no laughing matter.

First, a computational model requires us to put all our cards on the table. My shelves groan with insightful ‘how-to’ books by professional comedy writers and, although most of their best advice comes in the shape of algorithms, there is always something missing, an X factor that only we humans can supply. When we turn these algorithms into software, there is no clear translation for the magical realism of ‘Step 3: do something clever.’ Code just doesn’t work that way, sadly. So we build our clockwork clowns to better understand what makes us tick.

This brings us to the second reason for trying: jokes surprise us by showing us what we already know, albeit from a fresh angle, and it turns out we know an awful lot. A sense of humour poses a tough test of how much an AI knows, and of how well – and how flexibly – it can use that knowledge. Because jokes highlight the limits of conventional thinking, AI researchers study humour so as to give our machines the same tolerance for creative incongruity that we enjoy. The third reason is just as practical: a humorous machine is necessarily attuned to human values, and has more social awareness of its users.

That being said, it is usually easier to get funding to research humour’s more sober kin, such as metaphor, analogy, irony or creative language more generally, than to tackle jokes head on. One humour-focused European project on which I collaborated was roundly condemned by politicians in Britain’s far-Right party UKIP, who declared it a waste of taxpayers’ money and yet another reason to exit the European Union. Much of my own group’s research has focused on aspects of humour with a clear business case, such as the detection of sarcasm and irony in social media and in online product reviews. When a machine fails to detect our humorous insincerity, it doesn’t just fall short of the right interpretation: it arrives at the worst possible misinterpretation of our feelings. So pity the poor machine that tries to gauge the mood about vaccines on Twitter, as a current project of ours is aiming to do, and encounters a Tweet like ‘JFK would still be alive today if he had been vaccinated.’ Just consider how much commonsense knowledge is needed to spot the antivax sentiment lurking beneath this parody of pro-vaccine talking points.

Whether we set out to master the sweetness of dad jokes, the ribaldry of schoolyard puns, the acidity of biting sarcasm or the delicacy of gentle irony, all joking requires us to first become masters of expectations. Intuiting what others will expect in a given setting, and to which conclusions they are most likely to jump, is the key to building up those expectations before dramatically knocking them down. This is why so many jokes turn on clichés and stereotypes. (Think of the famed moth joke told by the late, great Norm Macdonald.) A cliché is often the shortest path between two familiar ideas, but jokes take us the long way around. They make the expected seem fresh again, and surprise us by telling us what we already know. Comedians buy these commodities low so as to sell them high, albeit with a twist. They bank on the statistical regularities behind clichés and stereotypes to play a numbers game with an audience. But our computers can play numbers games, too.

To get a handle on how people think, a machine can first model how we speak. A statistical analysis of a large text corpus, such as a vast swathe of the web, will reveal that we prefer the wording ‘fish and chips’ over ‘chips and fish’, and that we ‘put on our shoes and socks’ even if we actually put them on in the reverse order. Part of the comic appeal of Sacha Baron Cohen’s fictional character Borat, for instance, is the way he flouts these statistical norms to project an air of amiable but foreign cluelessness. Creative wordplay departs from the norm in ways that are deliberate, transparent and recoverable, and we can model this process by training a statistical language model on a large dataset.
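To make the ‘fish and chips’ point concrete, here is a minimal Python sketch that assumes a tiny invented corpus in place of a real web-scale one. It counts three-word sequences and reports which ordering of a pair the text prefers. The corpus and the function names (`trigram_counts`, `preferred_order`) are hypothetical and purely illustrative; this is not any particular system’s method.

```python
import re
from collections import Counter

# Invented toy corpus standing in for a large slice of the web
CORPUS = """
We had fish and chips on the pier. Fish and chips again tonight?
She put on her shoes and socks. Nobody orders chips and fish.
He laced up his shoes and socks and went out for fish and chips.
"""

def trigram_counts(text):
    """Count every three-word sequence in a lowercased, tokenised text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(zip(tokens, tokens[1:], tokens[2:]))

def preferred_order(counts, a, b):
    """Compare 'a and b' with 'b and a'; return (winner, its count, other count)."""
    ab = counts[(a, "and", b)]  # Counter returns 0 for unseen trigrams
    ba = counts[(b, "and", a)]
    if ab >= ba:
        return f"{a} and {b}", ab, ba
    return f"{b} and {a}", ba, ab

counts = trigram_counts(CORPUS)
print(preferred_order(counts, "chips", "fish"))   # ('fish and chips', 3, 1)
print(preferred_order(counts, "socks", "shoes"))  # ('shoes and socks', 2, 0)
```

A real language model generalises far beyond exact phrase counts, but the principle is the same: frequency in a corpus is a proxy for what speakers expect to hear.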

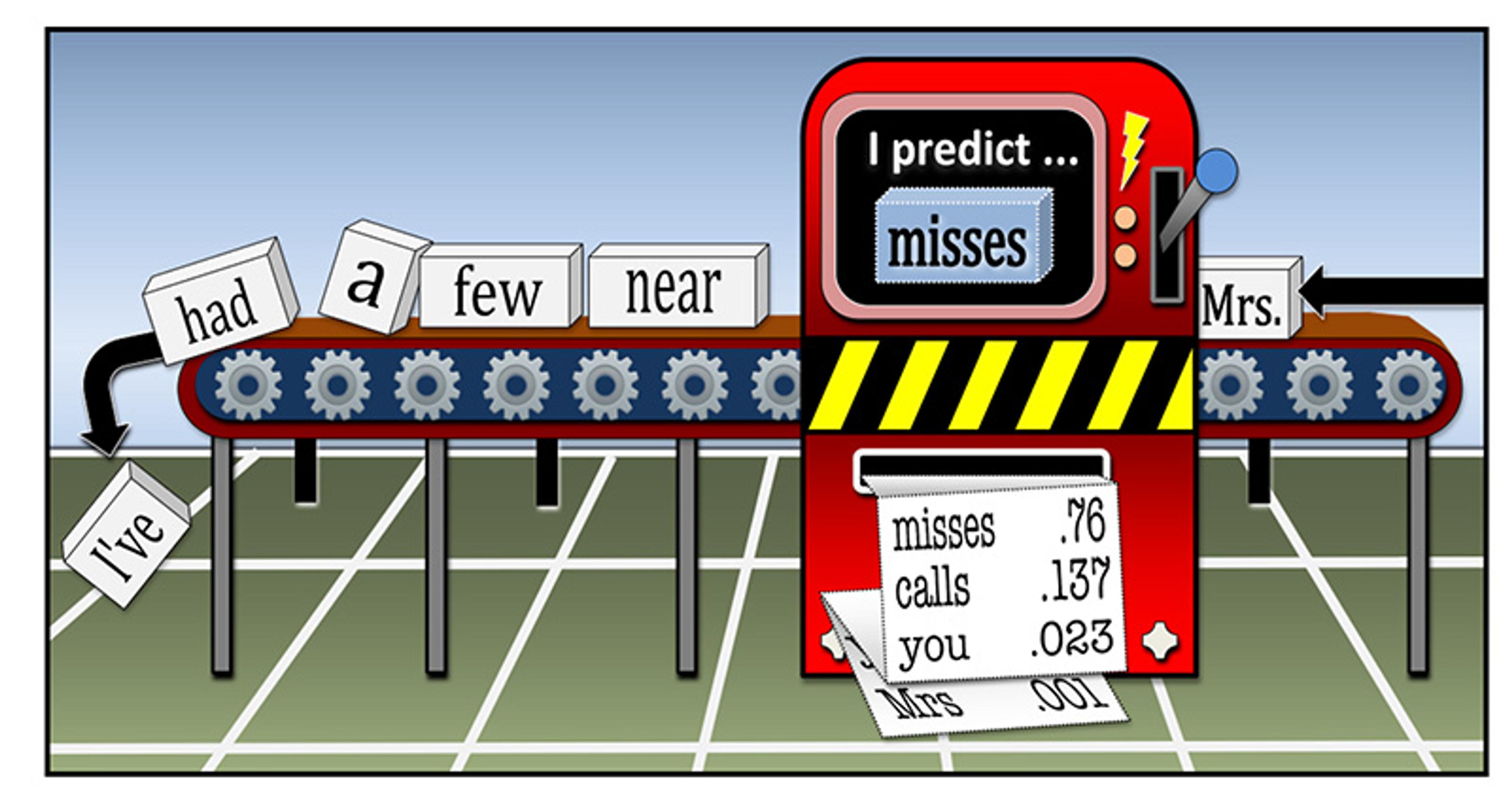

Take the witticism ‘I have never been married, but I have had a few near Mrs.’ A good model will predict misses in the last position, and assign a vanishingly small probability to Mrs. This is the detection stage. For recovery, the sound similarity of the very improbable Mrs to the very probable misses allows a machine to undo the replacement and recover the underlying idiom. To recover the joker’s intent, the machine must also grasp why an abortive marriage is a desirable near-miss. As this requires an emotional understanding of the feelings that are sublimated within a joke, statistical models are currently not so sure-footed on this terrain. However, progress on the humour front will go hand in hand with new advances in reading (and provoking) emotions, and in affective computing more generally. A good sense of humour – whether to recover our meaning in jokes, or to embed its own meanings – is the ultimate test of a machine’s ability to truly ‘get’ us.

A statistical ‘language model’ captures the hidden regularities of speech, allowing our machines to make predictions and identify witty departures from the norm. By applying the same logic in reverse, they can also produce departures of their own. Image supplied by the author
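The detection and recovery stages for the ‘near Mrs’ witticism can be sketched in a few lines of Python. This is a toy built on loud assumptions: the next-word probabilities and the pronunciation table are invented stand-ins for a trained language model and a pronunciation dictionary, the 0.001 surprise threshold is arbitrary, and the phonetic comparison uses the standard library’s `difflib.SequenceMatcher`. It shows the shape of the reasoning, not how any real system is built.

```python
from difflib import SequenceMatcher

# Hypothetical next-word probabilities for the context
# "I have never been married, but I have had a few near ..."
# (invented numbers standing in for a trained language model)
NEXT_WORD_PROBS = {
    "misses": 0.62,
    "things": 0.05,
    "death": 0.04,
    "collisions": 0.03,
    "Mrs": 0.00001,
}

# Hypothetical hand-written pronunciations in ARPAbet-style phones
PHONES = {
    "misses": ["M", "IH", "S", "IH", "Z"],
    "Mrs": ["M", "IH", "S", "IH", "Z"],
    "things": ["TH", "IH", "NG", "Z"],
    "death": ["D", "EH", "TH"],
    "collisions": ["K", "AH", "L", "IH", "ZH", "AH", "N", "Z"],
}

SURPRISE_THRESHOLD = 0.001  # assumed cut-off for "vanishingly small"

def detect(word):
    """Detection: flag a word the model finds very improbable here."""
    return NEXT_WORD_PROBS.get(word, 0.0) < SURPRISE_THRESHOLD

def sound_similarity(a, b):
    """Crude phonetic similarity: overlap ratio of two phone sequences."""
    return SequenceMatcher(None, PHONES[a], PHONES[b]).ratio()

def recover(word):
    """Recovery: pick the expected word that sounds most like the odd one."""
    candidates = [w for w in NEXT_WORD_PROBS if w != word and not detect(w)]
    # Weight sound similarity by how probable each candidate is
    return max(candidates,
               key=lambda w: sound_similarity(word, w) * NEXT_WORD_PROBS[w])

word = "Mrs"
if detect(word):
    print(f"'{word}' is surprising; the underlying word is probably '{recover(word)}'")
```

Weighting sound similarity by probability means a sound-alike only counts as the ‘real’ word if the model also expected it. Nothing here touches the harder problem of why a near-miss with marriage is funny.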

When it comes to writing their own gags, computers are still in the playground. They are fairly deft with puns – recognising ours, producing theirs – but they are still far from mastering the form, as it takes a good sense of humour to tell the accidents from the real thing. It also takes less insight to bend superficial forms, such as spelling and pronunciation, than to bend meanings, so computers often trip up when they play with ideas.
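Running the same logic in reverse gives a crude pun generator: take a familiar phrase, swap one word for a sound-alike, and keep the swap only if the new word relates to a chosen topic. The Python sketch below assumes small hand-built tables of idioms, sound-alikes and topic words, all hypothetical. It bends sounds rather than meanings, which is the easier half of the job.

```python
# Hypothetical hand-built resources standing in for a phrase corpus,
# a pronunciation dictionary and a semantic lexicon
IDIOMS = ["a few near misses", "a piece of cake", "break the ice"]

SOUND_ALIKES = {          # word -> words that sound (nearly) the same
    "misses": ["Mrs"],
    "piece": ["peace"],
}

TOPIC_WORDS = {           # topic -> words associated with that topic
    "marriage": {"Mrs", "wife", "ring"},
    "war": {"peace", "treaty", "army"},
}

def make_puns(topic):
    """Swap one idiom word for a sound-alike that relates to the topic."""
    related = TOPIC_WORDS.get(topic, set())
    puns = []
    for idiom in IDIOMS:
        words = idiom.split()
        for i, word in enumerate(words):
            for alt in SOUND_ALIKES.get(word, []):
                if alt in related:  # keep only swaps that fit the topic
                    puns.append(" ".join(words[:i] + [alt] + words[i + 1:]))
    return puns

print(make_puns("marriage"))  # ['a few near Mrs']
print(make_puns("war"))       # ['a peace of cake']
```

The topic check is what separates a deliberate pun from a random substitution, but nothing in it can tell whether the result is funny, or offensive.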

There are many more troll bots than droll bots on the internet because offence is easier to generate, purposefully or otherwise. This is also why machines still do a poor job of filtering online abuse, and why we still need people to police the line between fair speech and hate speech. My own bots, sadly, have caused unintended offence. Playing with language is like playing with a giant box of matches: you think you have anticipated all the possible dangers, and then out of the machine’s linguistic mashups comes a rape joke, a Holocaust joke, or the remark ‘You’re as emotional as a woman.’ We often accuse those who fret about such things of lacking a sense of humour, but the opposite is true: we need a sense of humour to know where to draw the line.

So how likely are machines to become standup comics? Robots can already play-act as standups if we feed them their lines in advance. You can see this with Alexa, as her developers have given her hundreds of ‘Easter eggs’ to discover (try ‘Alexa, can you rap?’ or ‘Alexa, make me a sandwich’). But if you ask Alexa to tell you a joke, you will find that her inventory of canned goods runs to the anodyne. Anthropomorphic robots such as Nao, which resemble large shiny toddlers, fare better on stage with the same kind of jokes because they engage us emotionally. We will them to succeed, as though watching our own kids in a nativity play. My own group uses two Nao robots, called Kim and Bap, to act out shaggy-dog tales of their own creation. But the cute little tykes are always getting us in hot water.

Once, when they acted out their take on Star Wars, Kim-as-Luke fell in love with Bap-as-Leia – then Bap promptly cooked him dinner. Although the robots know nothing of gender stereotypes, their act was labelled sexist. When they later put their own spin on the US presidential race between Donald Trump and Hillary Clinton, Kim and Bap had Trump propose to Clinton. They needed a reason for her to say yes. Finding his good qualities thin on the ground, the robots had Clinton accept because Trump is rich and powerful. The reviewers of a subsequent paper then accused them, and us, of portraying women as gold-diggers. If machines shouldn’t say what they can’t fully appreciate – and this goes for humans too – then we must give them the tools to see the world from different angles. That is, we must give them a genuine sense of humour.

But do we really need AIs that do standup? We already have plenty of joke-writing machines of the human variety, and their jobs are not going anywhere. Just as your workmates who are always ready with a witty quip pose no threat to the comedy professionals, AIs with a sense of humour will grease the rails of our everyday interactions, with machines and with each other (via clever add-ons to texting apps and email), but are not going to sell out any arenas with their acts. Rather, as the population ages and we become more reliant on machines in the home, we will need our home-care companions to be, well, more companionable. A sense of humour is more than just a fluency with jokes. It allows us to laugh at other people’s stories, and to ease tensions by soothing anxiety. It opens a window onto another person’s values, and vouches for their character. We don’t need our smart robots to be standup comedians. We need them to be standup guys. That’s the best reason of all for AI researchers to take machine humour seriously.