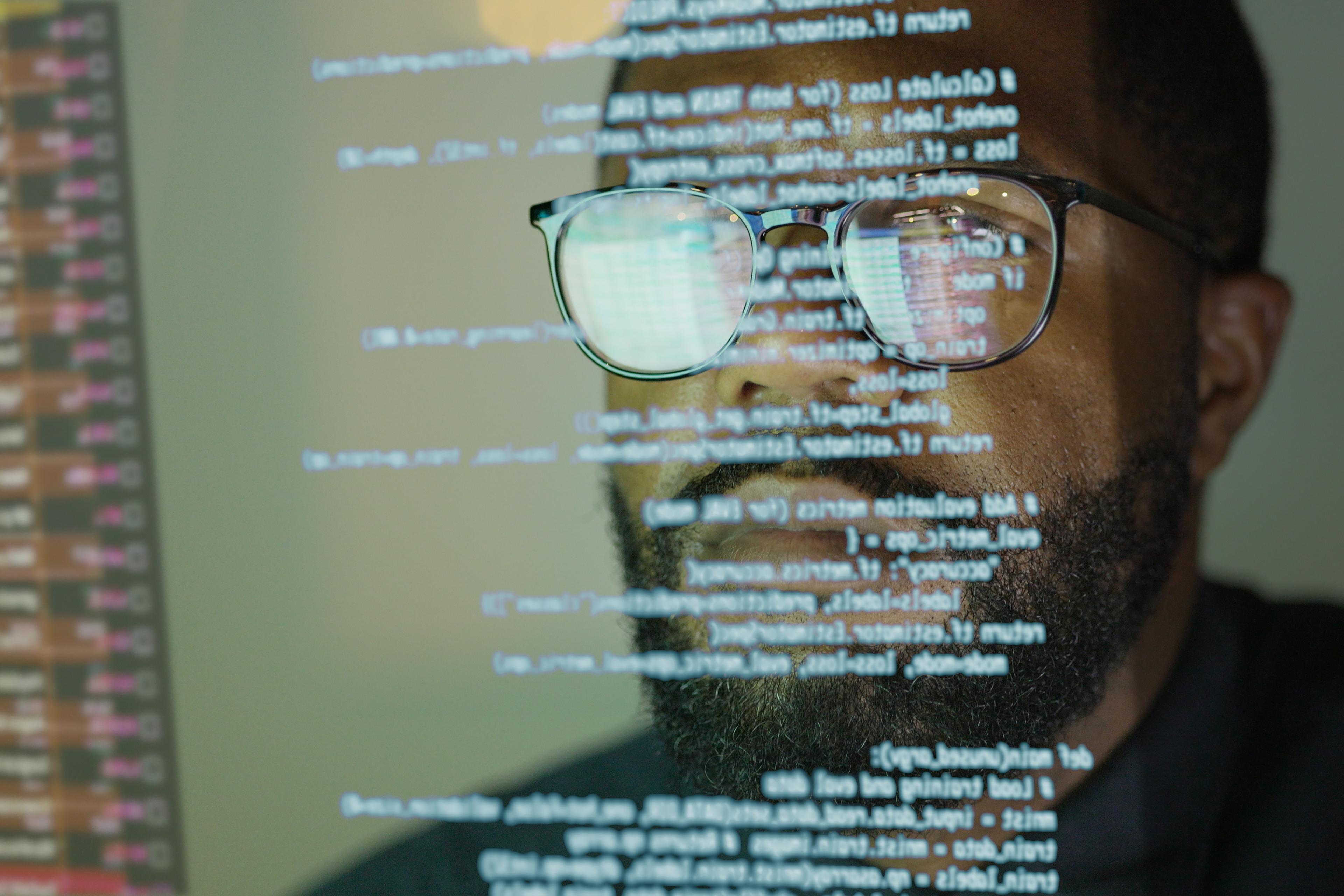

How do we know whether the words we read are a human’s? The question has become remarkably urgent in the face of conversational AI. Google’s Bard, Microsoft’s Bing and OpenAI’s ChatGPT generate realistic essay responses to our queries. The revolution in natural language processing has spurred doubts about what’s artificial or authentic – doubts that leave critics anxious not just that people could pass off the platforms’ output as their own. Allegations abound that conversational AI could also jeopardise education, journalism, computer programming and perhaps any profession that has depended on human authorship.

Yet such scepticism is hardly new. Doubts about what lies behind words are baked into the nature of conversation. Indeed, human interaction continuously collides with the limits of our ability to know what another really means. Large language models offer a timely opportunity to reflect on how we already navigate everyday conversation. Natural conversation – and not only technological solutions – offers us a guide to relieve the doubts posed afresh by conversational AI.

The doubts at issue cut deeper than words’ accuracy. To be sure, large language models have a track record for turning out inaccuracies – or ‘hallucinations’ as they’re called. During an embarrassing public debut, Bard incorrectly stated that the James Webb Space Telescope took the first image of a planet outside our solar system. (The European Southern Observatory’s Very Large Telescope already did so in 2004.)

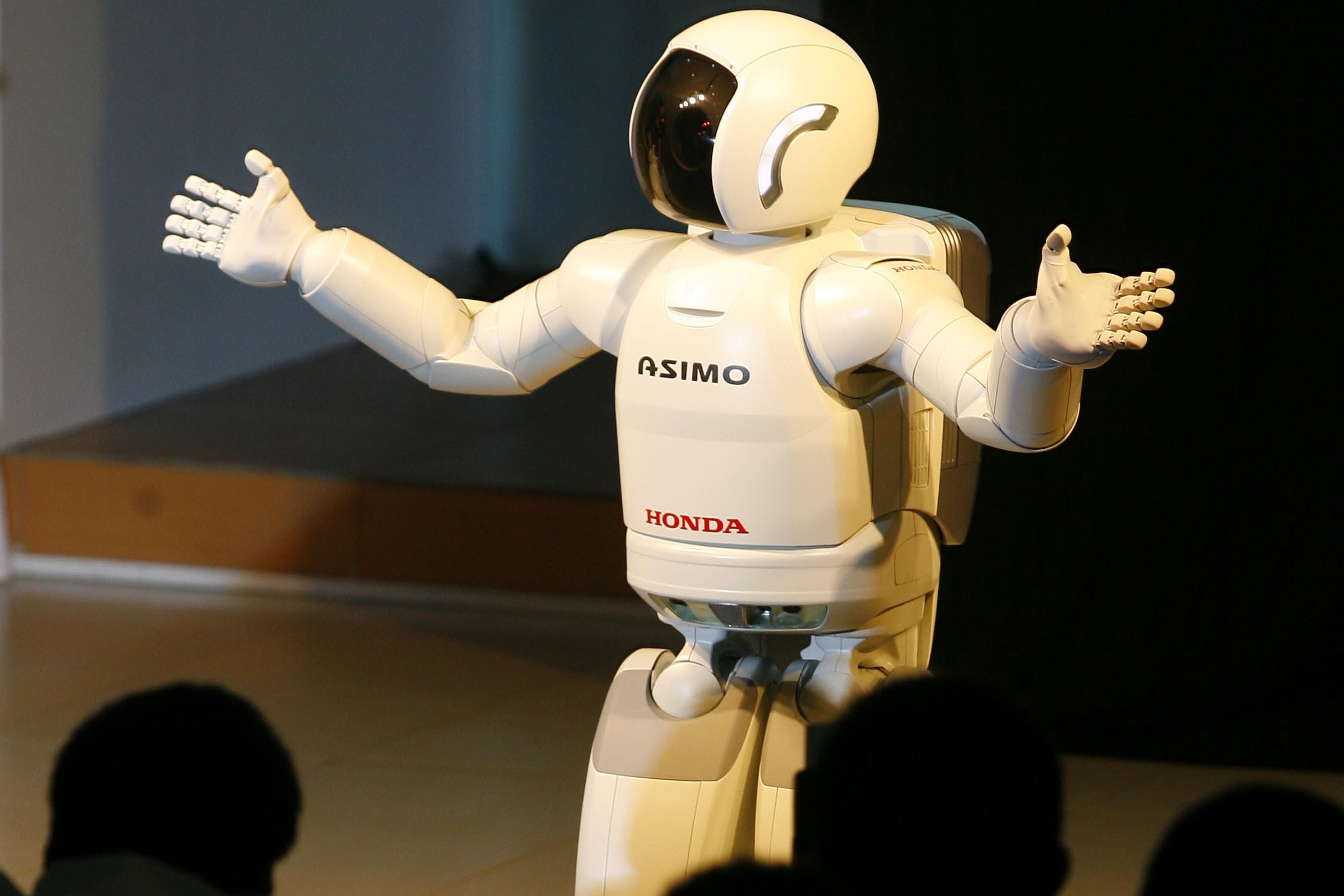

Deeper are doubts about the meaning behind words. When I confront text generated by ChatGPT, it can be genuinely difficult to know whether its author is a person or a robot. It might seem that the line between human and machine blurs now more than ever. In fact, however, conversational AI magnifies the uncertainties that already vex natural conversation.

Consider a familiar scenario. I receive a text message before a date: ‘So sorry, I’m sick. Won’t be able to make it tonight.’ Scepticism strikes. Is she really sick? How can I know with certainty that her illness is not a white lie meant to spare my feelings rather than admit that she no longer wants to see me? Short of a doctor’s note, I’m without any assurance that my date’s not reciting a polite cultural script. Whereas everyday conversation leaves us anxious that words could be robotic, conversational AI leaves us doubtful as to whether words are truly human.

Scepticism about what lies on the other side of words hinges on conversation slipping from authentic to algorithmic. In such moments, language feels like a barrier between us. I fear that someone (or something) does not mean what she, he, they or it says. The philosopher Stanley Cavell put it thus: ‘[O]ur working knowledge of one another’s (inner) lives can reach no further than our (outward) expressions, and we have cause to be disappointed in these expressions.’ Our words leave us wanting when we feel that language (written, spoken, bodily) fails to show us something more than it ever could: knowledge of what others think and feel, anterior to their speech.

It might seem preposterous that, as I suggest, rifts in natural conversation are akin to our uncertainties about AI authorship. After all, large language models have brought about stunning technical accomplishments. The underlying transformer (deep learning) model catapulted natural language processing forward by attending to entire inputs at once. The architecture of the Generative Pre-Trained Transformer (GPT) and Google’s Language Model for Dialogue Applications (LaMDA) does not process words one at a time in sequence (as was the case for recurrent neural networks). Attention mechanisms are now deployed to weight all prior states of text according to learned measures of context and relevance. The result is flexible, non-repeating and human-like.
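To make the idea of attention concrete, here is a minimal sketch in Python of how one position in a text can weight itself and every earlier position at once. It is an illustration under loud assumptions, not the code of GPT or LaMDA: the function names and the three two-dimensional "token" vectors are invented for the example, and a real model would first pass each token through learned projections, which are skipped here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def causal_attention(queries, keys, values):
    """For each position, blend the values of that position and all earlier ones."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, query in enumerate(queries):
        # Score the current position against itself and every earlier position.
        scores = [dot(query, keys[j]) / math.sqrt(dim) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the value vectors this position is allowed to see.
        blended = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical token vectors; real embeddings are learned and far larger.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and covers the whole prefix at once, which is the contrast with a recurrent network that must carry information forward one step at a time.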

Reflecting on how we navigate artificial doubts about each other’s utterances nonetheless offers guidance for our scepticism about AI-generated output. The urge to peek around another’s words is hardly innocent. That is because language is normally a public affair. Language provides tools to open ourselves up in a shared space of dialogue. But those tools – like our trust in AI – can break. The rapport we share gives way to doubts about what’s hidden.

The current technological solutions offer one means to quell our human doubts. Researchers at Harvard and MIT developed the Giant Language Model Test Room, which submits computer-generated passages to forensic analysis. Watermarks are also in development. A cryptographic code embedded into word sequences and punctuation marks aims to provide readers with a certification of text’s algorithmic provenance. When holding a student’s history essay about the French Revolution in my hands, authorial detection would empower me to know with certainty that the student didn’t poach ChatGPT results about the storming of the Bastille in 1789 (written in the style of a college undergraduate).
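What might such a watermark look like? The sketch below, in Python, shows one possible scheme and is not a description of any vendor's actual method. It assumes that a secret key splits word pairs into "green" and "red" by hashing, that a generator quietly prefers green words, and that a detector holding the same key counts how many green pairs a text contains. The key, the function names and the candidate words are all hypothetical.

```python
import hashlib

SECRET_KEY = "demo-key"  # hypothetical key shared by generator and detector


def is_green(previous_word, word, key=SECRET_KEY):
    """Hash the key and a word pair; call the pair 'green' if the first byte is even."""
    payload = f"{key}|{previous_word}|{word}".encode("utf-8")
    return hashlib.sha256(payload).digest()[0] % 2 == 0


def pick_watermarked(previous_word, candidates, key=SECRET_KEY):
    """Stand-in for a biased model: take the first green candidate if there is one."""
    for word in candidates:
        if is_green(previous_word, word, key):
            return word
    return candidates[0]


def green_fraction(text, key=SECRET_KEY):
    """Share of adjacent word pairs that are green; about half for ordinary text."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    return sum(is_green(prev, word, key) for prev, word in pairs) / len(pairs)


candidates = ["stormed", "seized", "took", "captured"]
choice = pick_watermarked("crowd", candidates)
score = green_fraction("the crowd stormed the bastille in 1789")
```

A fraction well above one half over a long passage would count as evidence of the watermark. On this sketch the evidence is statistical, so it grows with the length of the text and weakens when the words are paraphrased.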

When we see conversational AI as a human practice consistent with natural conversation, the artificiality of our demand for certainty about what lies behind either becomes all the more apparent. To the extent that we use words as tools to know what another means, that knowledge is of a particular kind. With words we acknowledge each other. As Cavell put it: ‘acknowledgement goes beyond knowledge … in its requirement that I do something.’ In conversation, we make claims on each other. My utterances are not just communications of facts but also invitations to respond. When deprived of acknowledgement in a shared space, we seek knowledge as consolation in the recesses of another’s mind.

Herein lies the artificiality of natural conversation: that words can wedge themselves between us. Psychologists have studied the ineluctable drive within language to attain knowledge outside it. What results is a false picture that language is the sort of thing that has ‘sides’, leaving an obstacle in place of our mutual understanding.

That large language models afford us doubts about the authorship of their output reminds us that the technology has yet to be fully acknowledged. To make it feel less alien, we should use AI tools more – not less. We might imagine a world in which professors require that students submit AI-generated drafts before their final essays. The queries that students put to the AI would be expected as well. Students would thereby have to distinguish their ultimate thoughts from prior algorithmic versions. In that world, conversational AI would cease to be an endpoint of knowledge and instead participate in the back-and-forth process of human conversation.

Pulling conversational AI into the orbit of natural conversation invites us to see our ethical relationships as the centre of gravity. When I examine a text’s authorship, my goal – strictly speaking – is not to ascertain knowledge of a fact; rather, it is to find someone (or something) responsible for the words. That professors expect students to submit essays written by authentic – not algorithmic – intelligence reflects their meritocratic ambition to grade students on what they intend to write. Knowledge plays a part. Far more important is the cultivation of critical thinking, effective communication and persuasive reasoning – skills with which we come to converse intentionally with others.

We converse intentionally when we can give reasons for our words. According to Elizabeth Anscombe, we answer the question: ‘Why?’ Conversational AI, however, cannot intend words. It predicts words. For Anscombe, as the philosopher Jeff Speaks puts it:

‘predictions are justified by evidence that the future state of affairs in question will be true, whereas expressions of intention are justified by reasons for thinking that state of affairs attractive.’

The LaMDA model behind Google’s Bard was trained on more than 1.56 trillion words and deployed 137 billion parameters, only to state falsely that the James Webb Space Telescope took the first image of an exoplanet. Predictions can go wrong. But those of conversational AI can never be intentional.

Through language I find a voice; I take responsibility for myself, and hold myself accountable to others. I could use ChatGPT to write a letter in French. But I’d deny myself an opportunity to speak French and thereby share a world with others when I visit Paris. Conversation is the conduit of community. So, learn French with ChatGPT as an instructor! The practice has already taken off.

To relieve our doubts about conversational AI, we should come to acknowledge it as an appendage to – rather than a replacement for – human interaction. We should define ever more precise use cases. We could use conversational AI to lend momentum – a running start, as it were – to conversation.