Kenny Chow was born in Myanmar, and moved to New York City in 1987. He worked for years as a diamond setter for a jeweller, earning enough to buy a house for his family before he was laid off in 2011. At that point, Chow decided to become a taxi driver like his brother, scraping together financing to buy a taxi medallion for $750,000. This allowed him to operate as a sole proprietor, with the medallion as an asset.

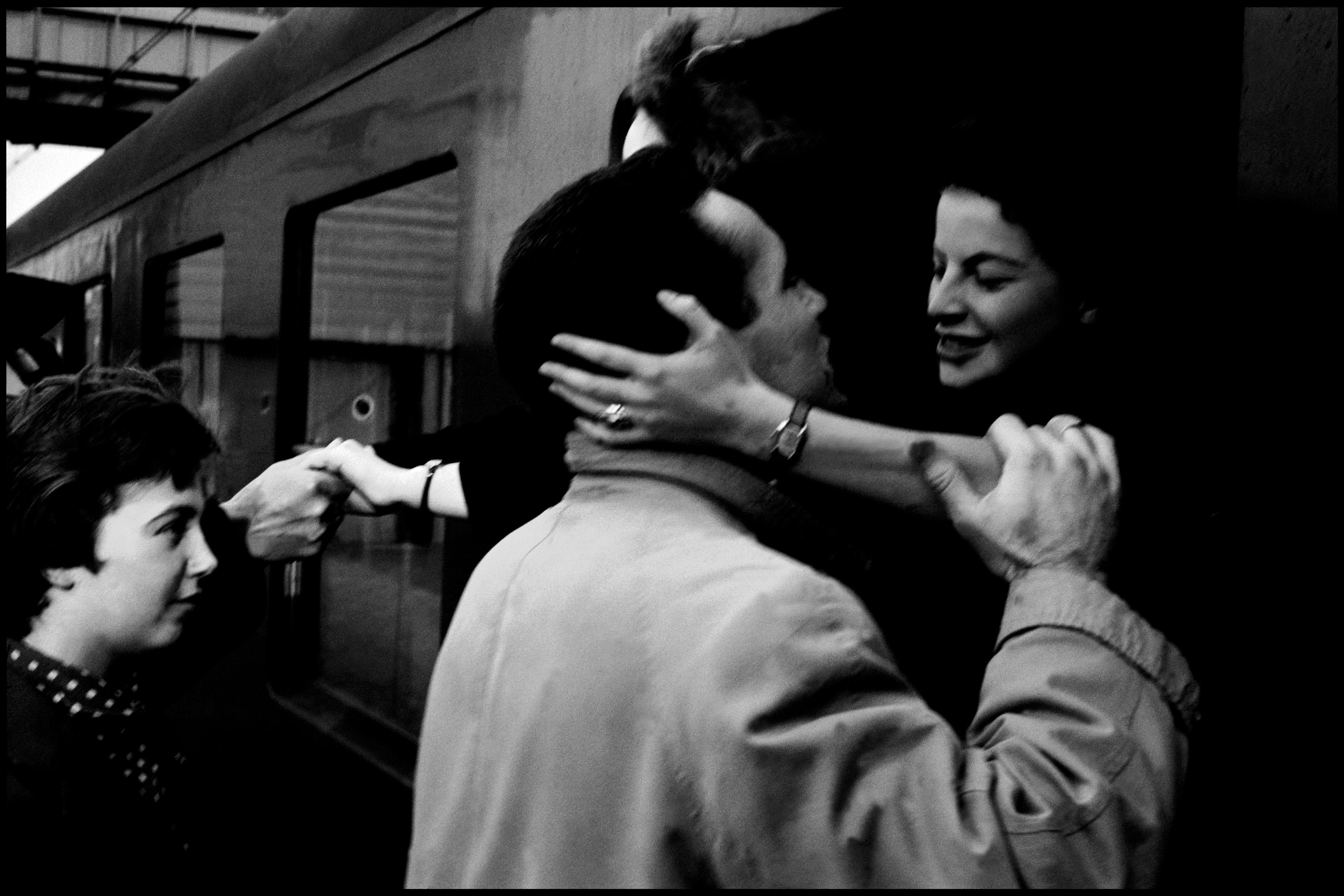

For a while, everything went according to plan, with taxi medallions rising in value to more than $1 million. Then the bubble burst, and along came ridesharing apps such as Lyft and Uber. The value of Chow’s medallion plummeted, and it became harder to keep up the payments on his loan. In 2018, he took his own life.

We’d all recognise that Chow’s situation is unfortunate. But, arguably, he took a calculated gamble when he purchased a risky asset, and so some of us might be tempted to blame him for his own misfortune. According to one school of thought, when these sorts of bets don’t pan out, only the gambler is to blame. That might sound callous, but it is indeed an attitude that many of us seem to hold, at least in the United States: a 2014 Pew Research report found that 39 per cent of Americans believed that poverty was due to a lack of effort on poor people’s part. When a lack of ‘effort’ is taken to include a failure to properly weigh up the risks inherent in a decision, this suggests that, in the end, many of us think that people are responsible for their own bad luck.

I disagree with this view. But my reasons aren’t solely political or moral in nature. Rather, insights from complexity science – specifically, computational complexity theory – show mathematically that there are hard limits on our capacity to make accurate and precise calculations of risk. Since it’s often impossible to get a reasonable sense of what will happen in the future, it’s unfair to blame people with good intentions who end up worse off as a result of unforeseen circumstances. This leads to the conclusion that compassion, not blame, is the appropriate attitude towards those who act in good faith but whose bets in life don’t pay off.

The starting point is to note that, for people to be held responsible for their actions, they have to know about certain features of the world. In many cases, even this minimal condition for blameworthiness isn’t satisfied. For example, Chow would have struggled to predict that the rise of ridesharing apps would crater the market for taxi medallions in New York City – but so, too, would most of us. By their very nature, technological disruptions are difficult to foresee; if they were easy to predict, early investors in these technologies wouldn’t get so rich. Such a low bar for blameworthiness seems too harsh to be plausible; how can any of us be blamed for failing to spot trends that almost no one was able to see, despite the significant material incentives for doing so?

The bar for blameworthiness can be made more precise by saying that, in order to be blamed for a gamble, people must possess an accurate causal model of the system in which they act. That is, they must know how different variables in the system do or don’t affect one another. Chow’s bet on a taxi medallion went poorly because of a complex causal nexus of speculation and technological development that led the price for taxi medallions to rise steadily before falling precipitously. To predict the collapse in the price of taxi medallions without the benefit of luck, one would need to have a crisp picture of this labyrinthine causal structure.

Here’s where computational complexity theory kicks in. It turns out that learning the causal structure of real-world systems is very hard. More precisely, trying to infer the most likely causal structure of a system – no matter how much data we have about it – is what theorists call an NP-hard problem: given a general dataset, it can be fiendishly hard for an algorithm to learn the causal structure that produced it. In the worst case, as more variables are added to the dataset, the time that the best known algorithms take to learn the structure of the system under study grows exponentially. Under the assumption that our brains also learn by running algorithms, these results apply to human reasoning just as much as they apply to any computer.
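To get a rough feel for the scale of the problem, it helps to count how many causal structures are even possible. The sketch below assumes that a causal structure is represented as a directed acyclic graph over the variables, which is a common modelling choice rather than something the argument above depends on. It uses Robinson's recurrence for the number of labelled directed acyclic graphs; the function name `count_dags` is purely illustrative. The size of this search space is not a proof of hardness in itself, but it shows why checking every candidate quickly becomes hopeless.

```python
from math import comb


def count_dags(n: int) -> int:
    """Count labelled directed acyclic graphs on n nodes (Robinson's recurrence)."""
    counts = [1]  # exactly one (empty) graph on zero nodes
    for m in range(1, n + 1):
        total = 0
        for k in range(1, m + 1):
            # Inclusion-exclusion over the k nodes that have no incoming edges.
            sign = 1 if k % 2 == 1 else -1
            total += sign * comb(m, k) * 2 ** (k * (m - k)) * counts[m - k]
        counts.append(total)
    return counts[n]


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} variables: {count_dags(n)} candidate structures")
```

With three variables there are 25 candidate structures; with five there are already 29,281, and the count keeps climbing faster than any simple exponential.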

One way to get around these constraints is to assume that the real world has a relatively simple causal structure: for example, we could assume that no variable in a system (say, the price of oil) depends on more than two other variables (say, demand and supply of oil). If we restrict the possibilities in this way, then estimating a causal structure becomes less difficult. Such heuristic approaches are a crucial part of how humans actually form beliefs, as the philosopher Julia Staffel has argued. However, treating a complex system as though it’s simple is a dangerous game; heuristics can misrepresent the world in consequential ways. Indeed, the unpredictability of the course of our lives is partly due to the rich causal complexity of the social world, with its interlocking web of economic, political, psychological and other factors. Under these conditions of extreme complexity, which are typical of most real-world systems, it’s rarely the case that people can ever meet the bar for blameworthiness described above.
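The effect of the 'no more than two causes' restriction can be made concrete with a small count. This is a sketch under the same assumption that causal structures are modelled as graphs in which each variable has a set of direct causes, or 'parents'; the function `parent_set_count` and its parameters are hypothetical names chosen for illustration. It compares how many sets of direct causes one variable could have with and without the restriction.

```python
from math import comb
from typing import Optional


def parent_set_count(n_vars: int, max_parents: Optional[int] = None) -> int:
    """Count the possible sets of direct causes for one variable.

    n_vars is the total number of variables in the system; max_parents caps
    how many direct causes a variable may have (None means no cap).
    """
    others = n_vars - 1  # every other variable is a potential cause
    limit = others if max_parents is None else min(max_parents, others)
    return sum(comb(others, k) for k in range(limit + 1))


if __name__ == "__main__":
    for n in (5, 10, 20, 30):
        unrestricted = parent_set_count(n)
        restricted = parent_set_count(n, max_parents=2)
        print(f"{n} variables: {unrestricted} unrestricted vs {restricted} restricted")
```

For a system of 10 variables, the unrestricted count is 512 while the restricted count is 46. The restricted count grows only quadratically as variables are added, which is what makes the simplification attractive, and also what makes it risky when the world does not in fact obey it.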

A better way for a person to deal with the overwhelming complexity of the social world is to hedge their bets. By investing so much of his worth in a taxi medallion, Chow put all his eggs in one basket. So, you might say, he made himself particularly susceptible to ruin. What individuals should do instead, perhaps, is pursue a diverse range of offsetting strategies that eliminate or drastically reduce the risk of catastrophe, even under conditions of severe uncertainty.
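The arithmetic behind hedging can be sketched in a few lines. The example rests on loud simplifying assumptions that are not claims about any real market: wealth is split equally across a number of bets, each bet independently either fails completely or holds its value, and every bet has the same chance of failing. The function `prob_loss_at_least` and its parameters are hypothetical names used only for this illustration.

```python
from math import ceil, comb


def prob_loss_at_least(fraction: float, n_bets: int, p_fail: float) -> float:
    """Probability of losing at least `fraction` of total wealth.

    Assumes wealth is split equally across n_bets independent bets, each of
    which fails outright with probability p_fail (an idealised assumption).
    """
    threshold = ceil(fraction * n_bets)  # number of failed bets needed
    return sum(
        comb(n_bets, k) * p_fail ** k * (1 - p_fail) ** (n_bets - k)
        for k in range(threshold, n_bets + 1)
    )


if __name__ == "__main__":
    p = 0.2  # assumed chance that any single bet fails
    for n in (1, 2, 5, 10):
        print(f"{n} bets: P(total loss) = {prob_loss_at_least(1.0, n, p):.6f}")
```

Under these assumptions, a person with a single bet is ruined whenever that bet fails, whereas a person with five bets is ruined only if all five fail at once. Real bets are rarely independent, so the sketch overstates how much protection hedging provides.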

The problem is that much of economic and social life in affluent countries is structured to require individuals to commit most of their resources towards one strategy for pursuing a flourishing life. Taking out a student loan or mortgage, or buying a taxi medallion, are all strategies that require a large, if not total, commitment of a person’s financial resources. Here, real hedging would require us to start from a place of considerable wealth, and so it isn’t a viable strategy for many. Most of us remain consigned to placing big bets in a casino where it’s effectively impossible to know the underlying odds. The precarity of this situation means that compassion, not blame, is the appropriate attitude to have towards those who end up on the losing end of these bets.

Questions about the attitudes that we ought to have towards others are psychological and moral in nature. But they’re also politically significant. How we regard those who end up less fortunate informs how we address social inequality, and the extent to which we care about it. This brings us back to Kenny Chow’s death, and indeed, the deaths of so many others. Since the year 2000, life expectancy in the US has fallen, as the economists Anne Case and Angus Deaton have shown – and this decrease has been driven almost entirely by an increase in what they call ‘deaths of despair’, such as drug overdoses and suicides. Despair thrives where empathy is missing; right now, our lack of compassion for one another is killing us.

Reversing this trend requires a policy response, but it also requires a shift in our attitudes towards those who end up worse off as a result of risky but well-intentioned decisions. The limits of our ability to infer the complex causal structure of the social world lead directly to the conclusion that blame isn’t appropriate. No matter how smart we think we are, there’s a hard limit on what we can know, and we could easily end up on the losing end of a big bet. We owe it to ourselves, and others, to build a more compassionate world.

Published in association with the Santa Fe Institute, an Aeon+Psyche Strategic Partner.